Download the full dataset used in the papers.

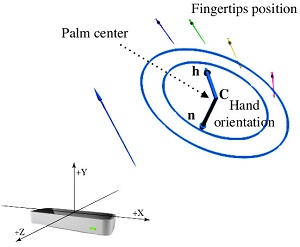

This dataset contains gestures performed by 14 different people, each performing 10 different gestures repeated 10 times each, for a total of 1400 gestures. Data from both the Kinect and the Leap Motion were acquired with the setup shown in the figure above. Calibration parameters for the Kinect are also provided. The Leap data consist of all the parameters provided by the Leap SDK (version v1, as available in Spring 2014).

Microsoft Kinect | Leap Motion

Creative Senz3D

We provide the dataset used in papers [1] and [2]. The dataset contains several different static gestures acquired with the Creative Senz3D camera. It was used to test the prediction accuracy of a multi-class SVM gesture classifier trained on synthetic data generated with HandPoseGenerator. Please cite papers [1] and [2] if you use this dataset.

[1] A. Memo, L. Minto, P. Zanuttigh, "Exploiting Silhouette Descriptors and Synthetic Data for Hand Gesture Recognition", STAG: Smart Tools & Apps for Graphics, 2015

[2] A. Memo, P. Zanuttigh, "Head-mounted gesture controlled interface for human-computer interaction", Multimedia Tools and Applications, 2017

Download the full dataset used in the paper.

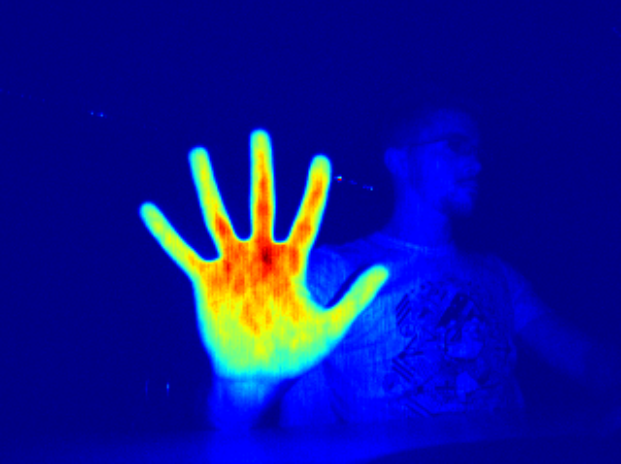

The dataset contains gestures performed by 4 different people, each performing 11 different gestures repeated 30 times each, for a total of 1320 samples. For each sample, color, depth and confidence frames are available. Intrinsic parameters for the Creative Senz3D are also provided.

Color | Depth | Confidence
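The sample counts quoted for the two datasets follow from a subject × gesture × repetition layout. The sketch below enumerates such an index and checks the totals. It is only an illustration: the names `SampleId` and `enumerate_samples` are hypothetical, and the 1-based numbering is an assumption that does not necessarily match the file or folder naming inside the downloaded archives.

```python
from itertools import product
from typing import List, NamedTuple


class SampleId(NamedTuple):
    """Hypothetical identifier for one recorded gesture sample."""
    subject: int
    gesture: int
    repetition: int


def enumerate_samples(n_subjects: int, n_gestures: int, n_repetitions: int) -> List[SampleId]:
    """List every (subject, gesture, repetition) combination, numbered from 1 (assumed)."""
    return [
        SampleId(s, g, r)
        for s, g, r in product(
            range(1, n_subjects + 1),
            range(1, n_gestures + 1),
            range(1, n_repetitions + 1),
        )
    ]


# Kinect + Leap Motion dataset: 14 people, 10 gestures, 10 repetitions.
kinect_leap = enumerate_samples(14, 10, 10)
# Creative Senz3D dataset: 4 people, 11 gestures, 30 repetitions.
senz3d = enumerate_samples(4, 11, 30)

print(len(kinect_leap))  # 1400
print(len(senz3d))       # 1320
```

An index of this kind can be handy for checking that a download is complete, once it has been mapped onto the actual naming used in the archives.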