Abstract

In this paper we propose a framework for the fusion of depth data produced by a Time-of-Flight (ToF) camera and a stereo vision system. Initially, depth data acquired by the ToF camera are upsampled by an ad-hoc algorithm based on image segmentation and bilateral filtering. In parallel, a dense disparity map is obtained using the Semi-Global Matching stereo algorithm. Reliable confidence measures are extracted for both the ToF and stereo depth data. In particular, the ToF confidence also accounts for the mixed-pixel effect, and the stereo confidence accounts for the relationship between the pointwise matching costs and the cost obtained by the semi-global optimization. Finally, the two depth maps are synergically fused by enforcing the local consistency of depth data, accounting for the confidence of the two data sources at each location. Experimental results clearly show that the proposed method produces accurate high-resolution depth maps and outperforms the compared fusion algorithms.

The full paper can be downloaded from here

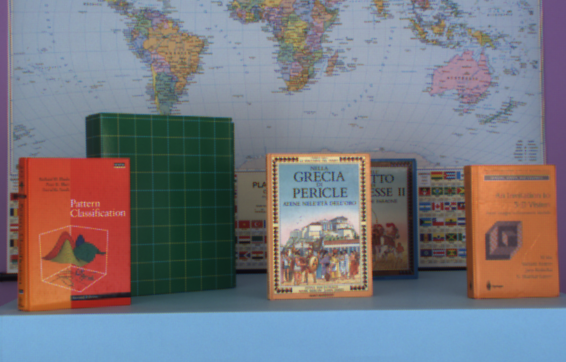

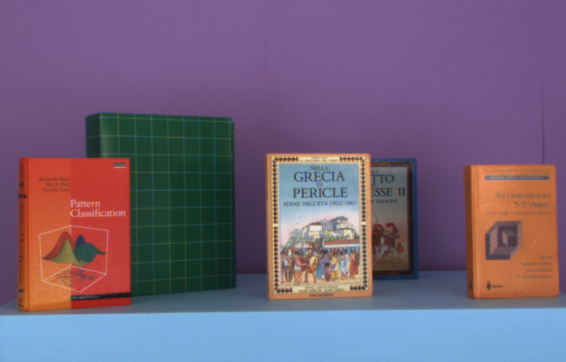

Experimental Results Dataset

The experimental results dataset has been obtained from [1]. The archive containing all the data used for the experimental results of [1] can be downloaded from here. The archive contains the following data folders:

- The folder "Input" contains the input data for the 5 scenes.

- The folder "Calibration_Parameters" contains all the calibration parameters for the employed acquisition setup.

- The folder "Ground Truth" contains the ground truth data computed with space time stereo.

- The folders "Results", "Errors" and "Discontinuities" contain experimental data for [1] not related to this paper.

Results from this paper

This section contains additional data about the experimental results that could not be included in the paper due to length constraints. In particular, it contains:

- the confidence maps produced by the methods proposed in Sections 4 and 5 of the paper for both the ToF and the stereo system [3] on the considered dataset;

- the disparity maps produced by the ToF upsampling, by the stereo vision system, by the proposed fusion algorithm, and by the other methods compared in Section 7 of the paper ([1,2,4,5]);

- the error maps of each method, both in terms of absolute differences and of mean squared error.

Contents

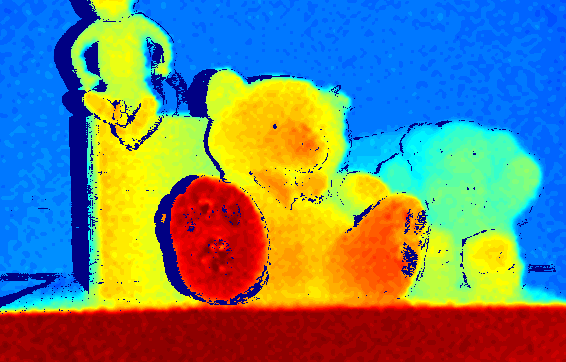

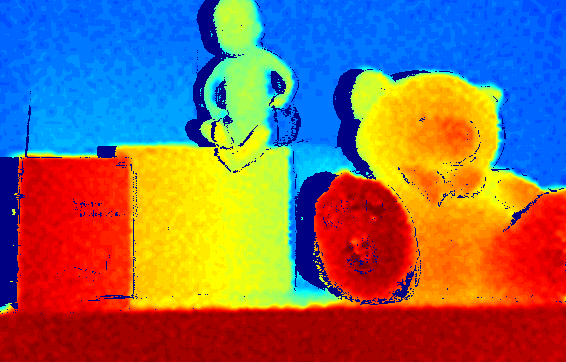

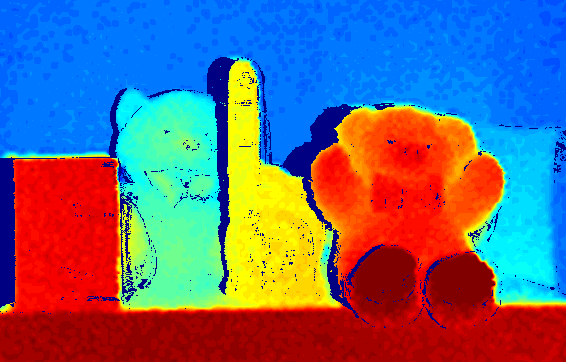

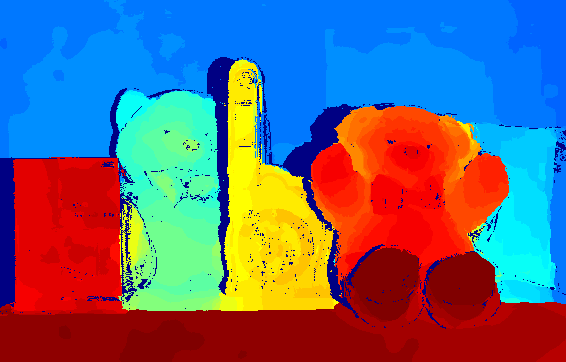

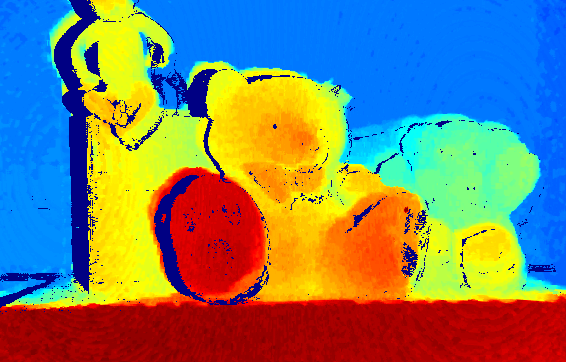

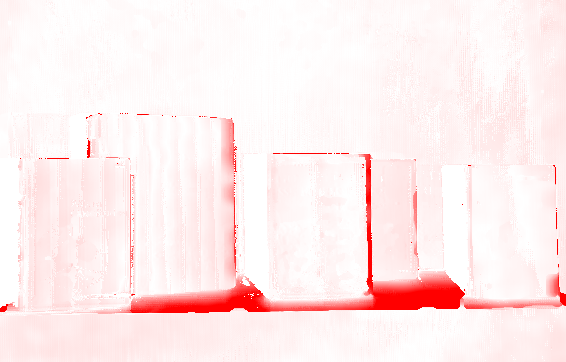

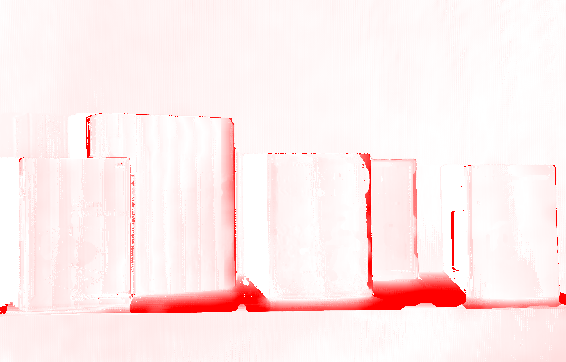

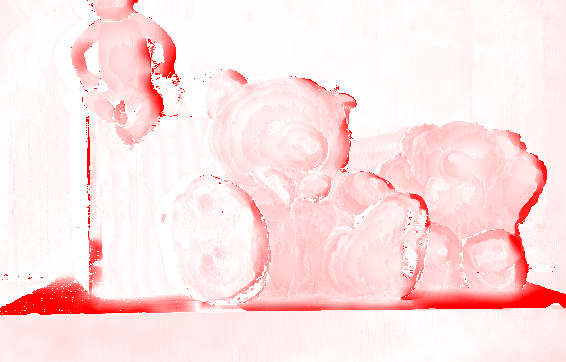

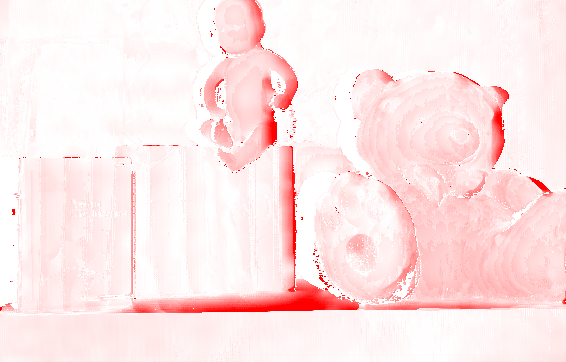

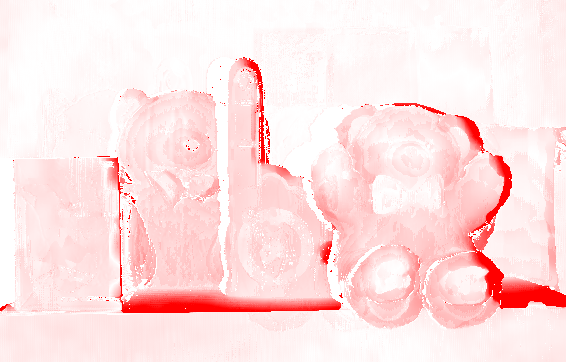

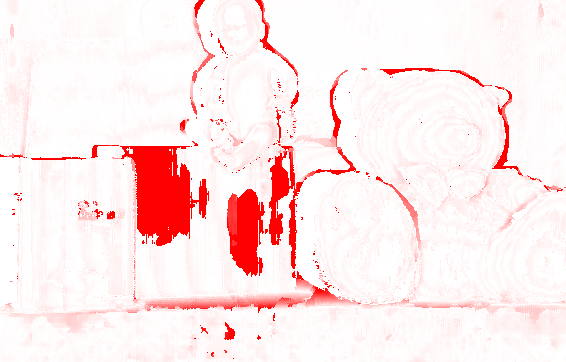

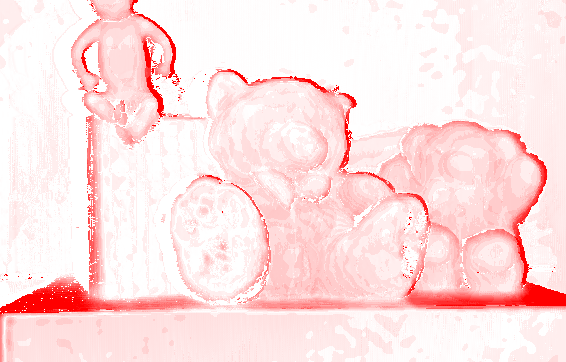

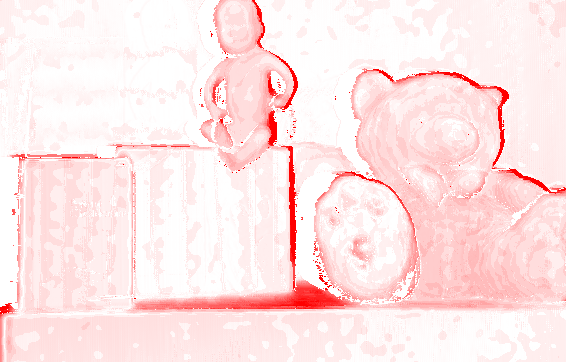

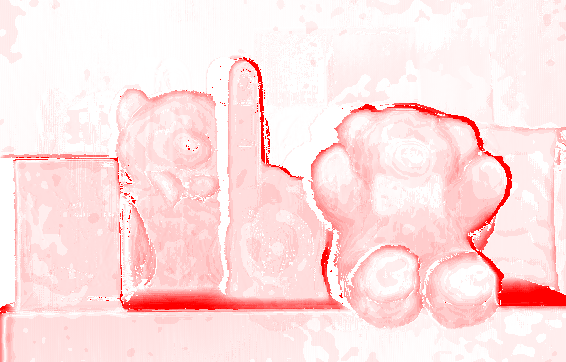

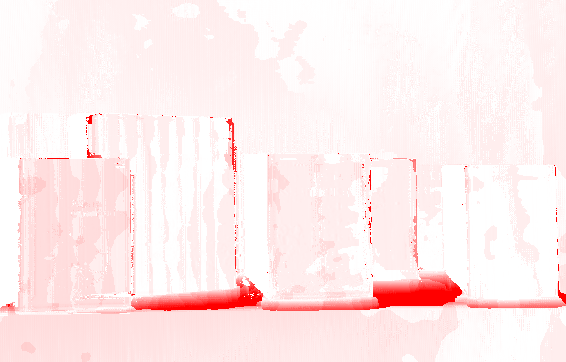

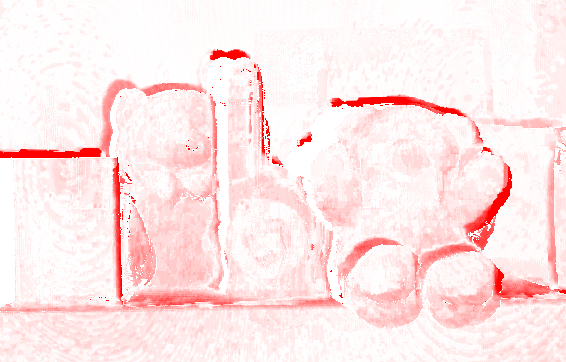

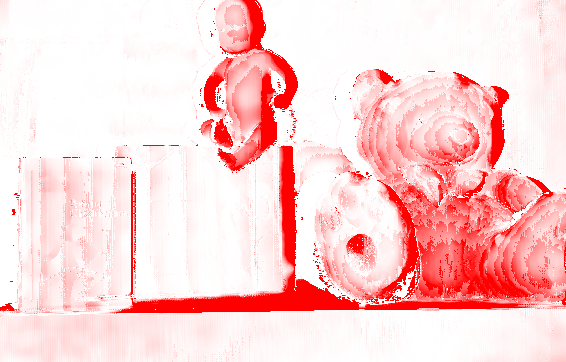

1 Confidence maps

For each scene of the dataset used for the comparison, we show the confidence maps associated with the ToF and stereo data. The first column shows the reference color image, the second, third and fourth columns show the confidence maps associated with the ToF data, and the last column shows the confidence map of the stereo data. For the ToF data, we show the confidence from amplitude and intensity values $P_{AI}$, the confidence from local variance $P_{LV}$, and their product $P_T = P_{AI} P_{LV}$. The confidence of the stereo system is denoted $P_S$. As shown in the color map below, dark values correspond to low confidence and bright values correspond to high confidence.
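The combination of the two ToF confidence terms can be illustrated with a minimal sketch. It assumes that the two maps are stored as nested lists of equal size with values in [0, 1]; the function name `tof_confidence` and the sample values are hypothetical and are not taken from the paper or the dataset.

```python
def tof_confidence(p_ai, p_lv):
    """Per-pixel product P_T = P_AI * P_LV of two confidence maps.

    Both maps are assumed to be nested lists (rows of floats in [0, 1])
    with identical shape; this storage format is an assumption.
    """
    if len(p_ai) != len(p_lv):
        raise ValueError("confidence maps must have the same number of rows")
    p_t = []
    for row_ai, row_lv in zip(p_ai, p_lv):
        if len(row_ai) != len(row_lv):
            raise ValueError("confidence maps must have the same row length")
        p_t.append([a * b for a, b in zip(row_ai, row_lv)])
    return p_t


# Hypothetical 2x2 maps, for illustration only.
p_ai = [[1.0, 0.5], [0.8, 0.0]]
p_lv = [[0.5, 0.5], [1.0, 0.9]]
p_t = tof_confidence(p_ai, p_lv)
```

Because the two terms are multiplied, a pixel receives a high overall ToF confidence only when both terms are high; a zero in either map forces the product to zero.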

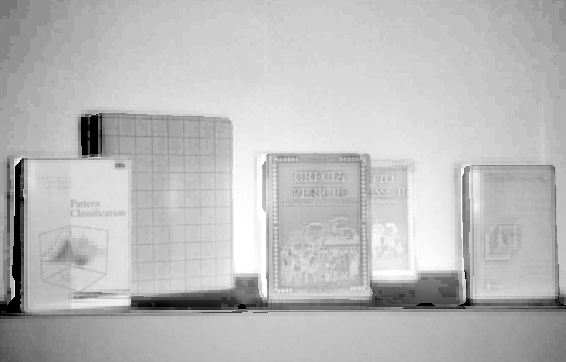

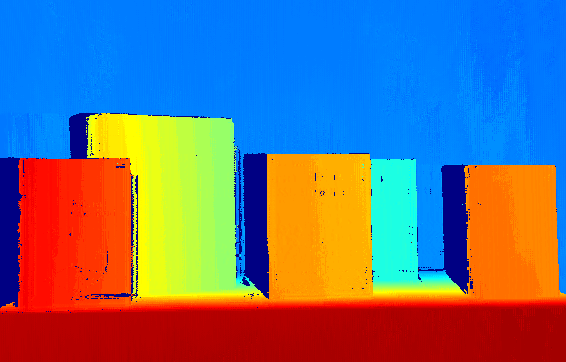

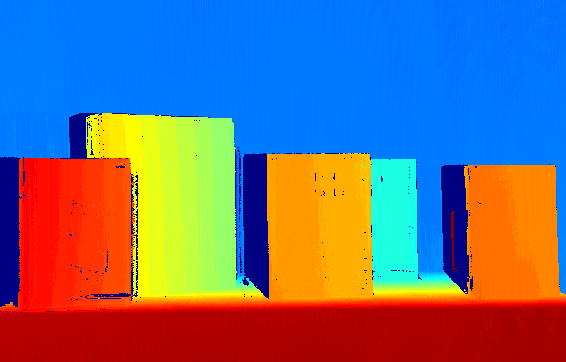

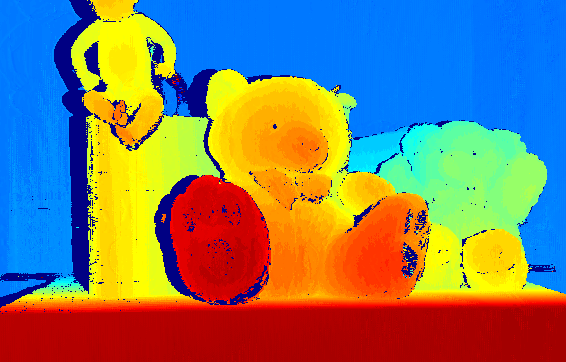

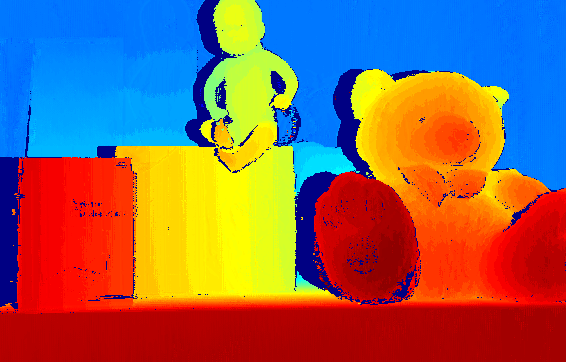

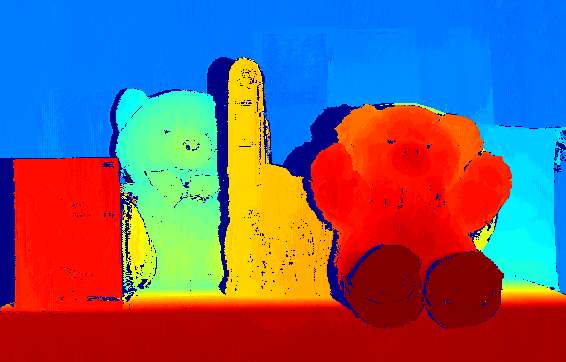

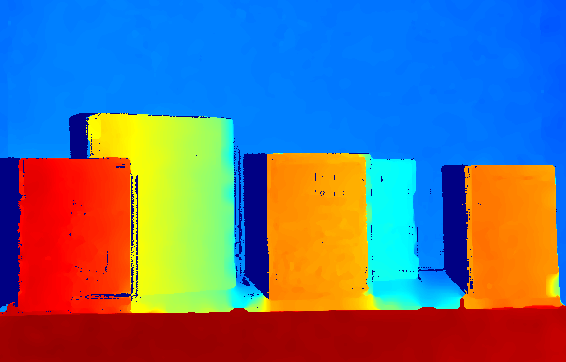

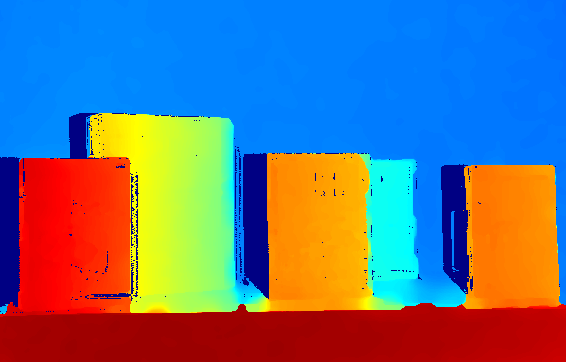

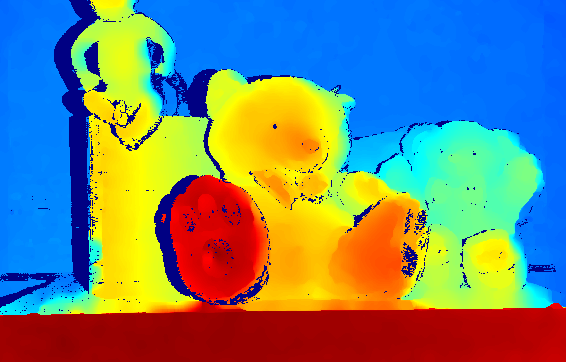

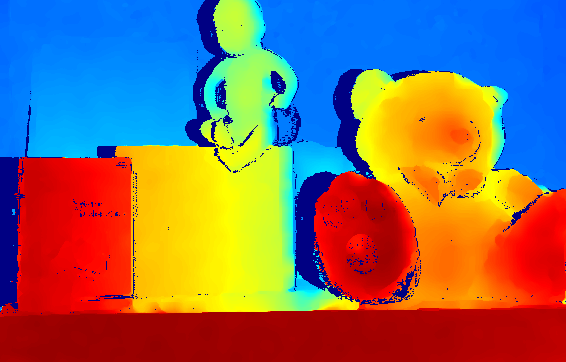

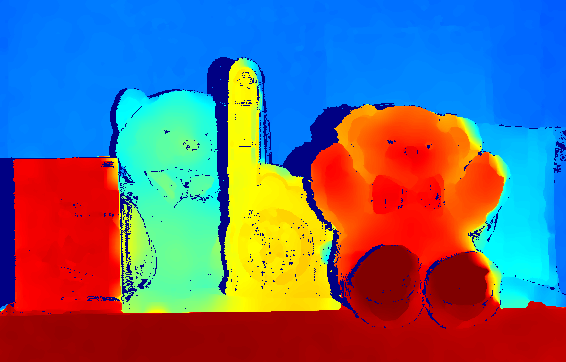

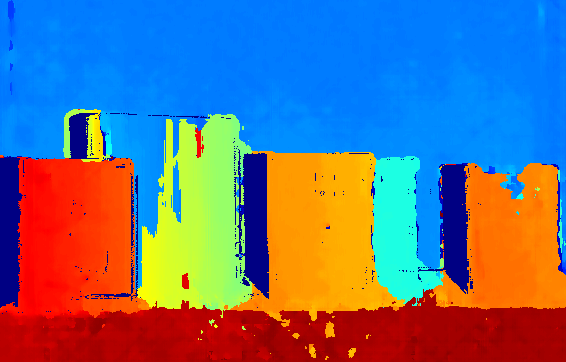

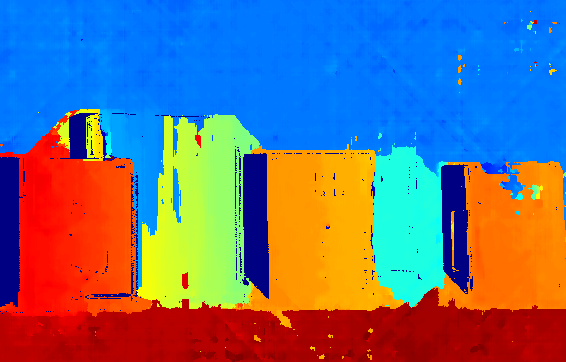

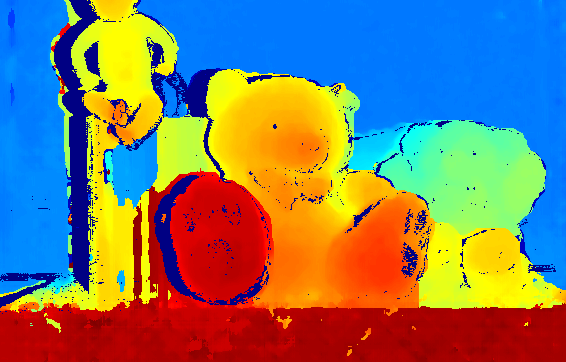

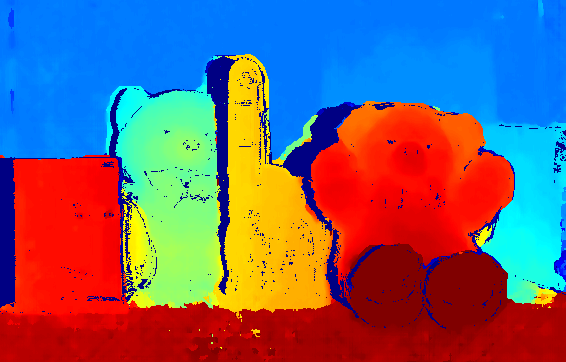

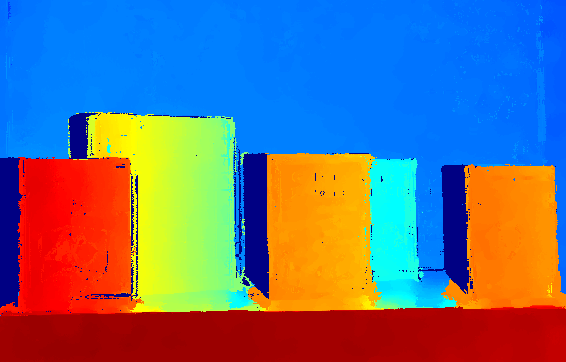

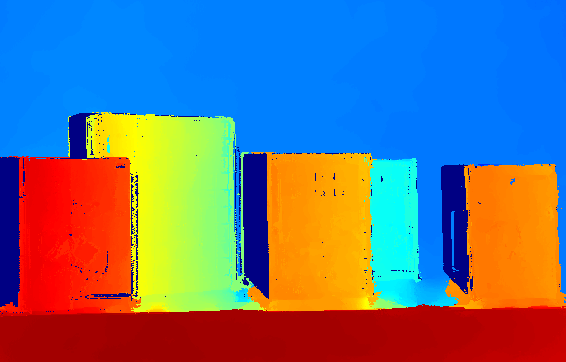

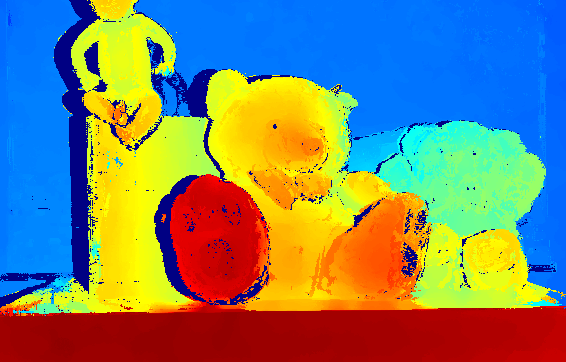

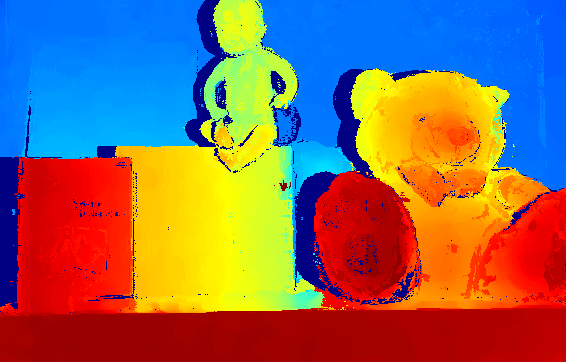

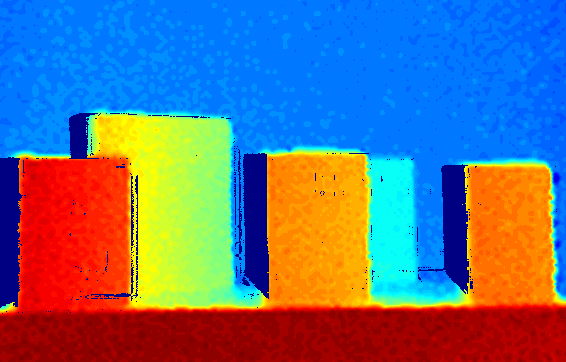

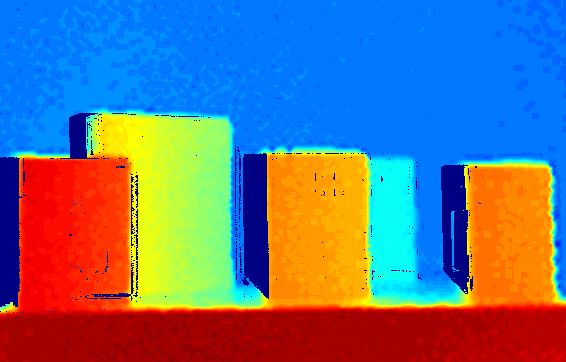

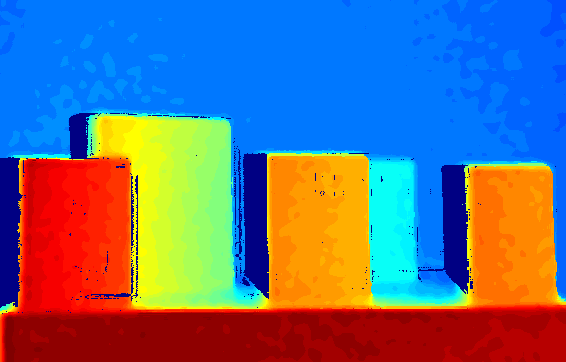

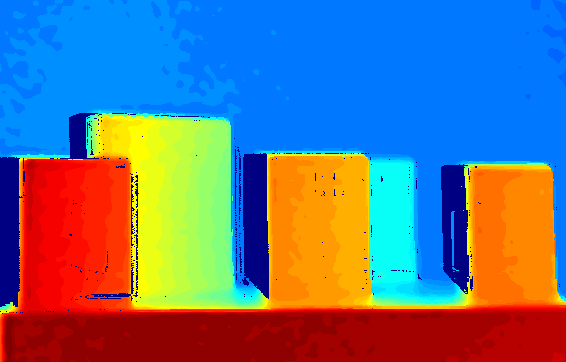

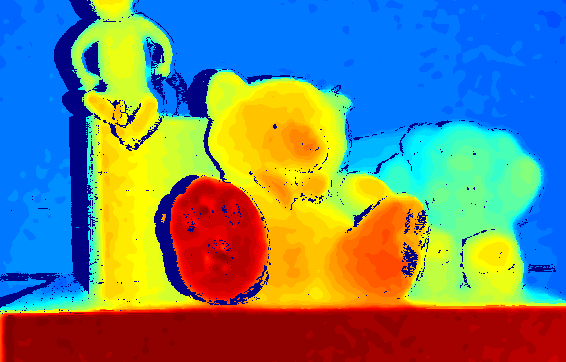

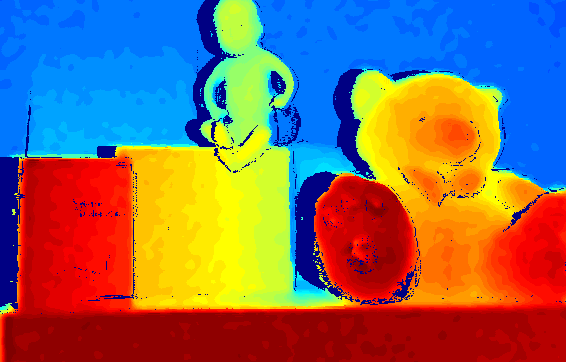

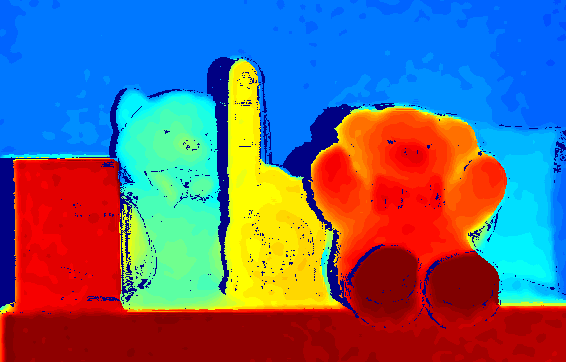

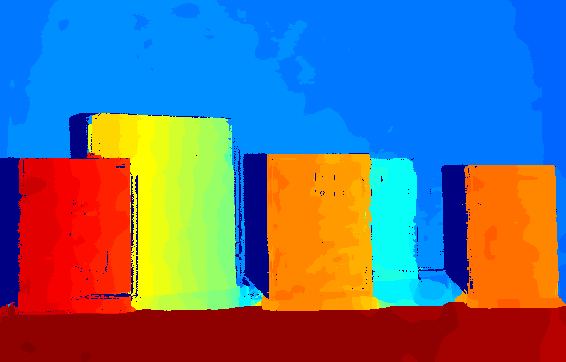

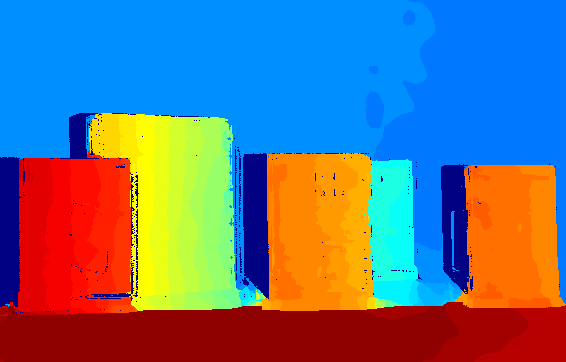

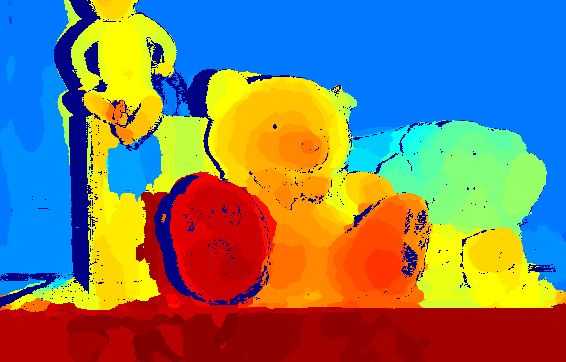

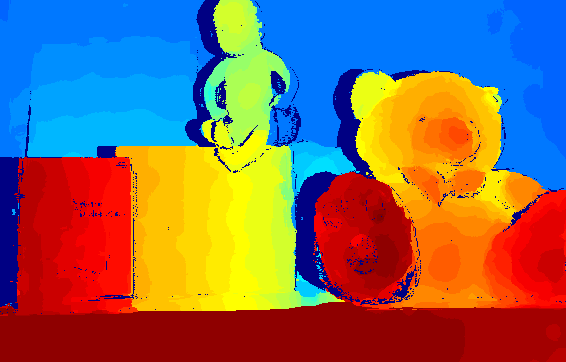

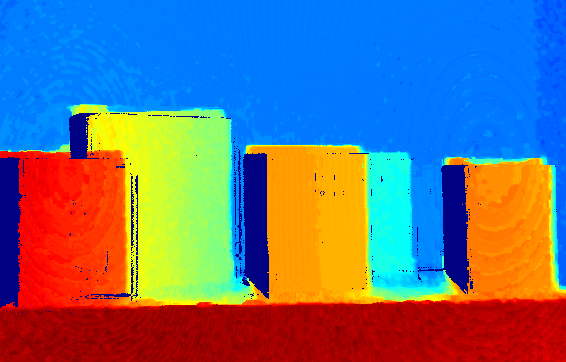

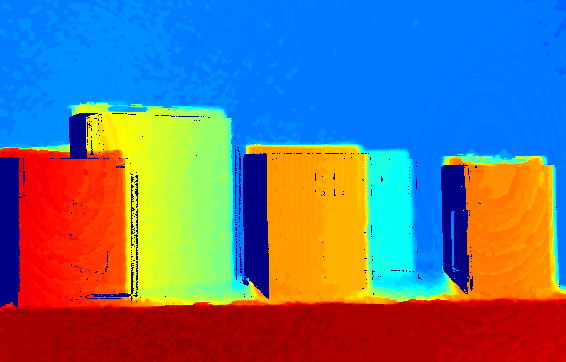

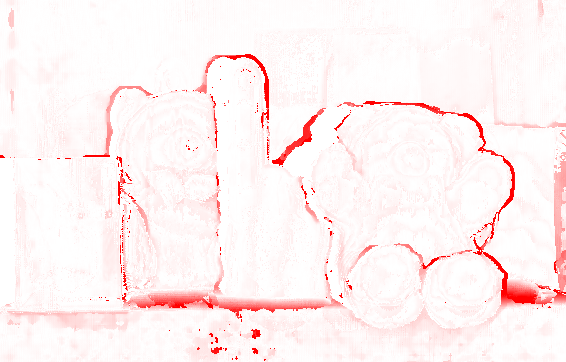

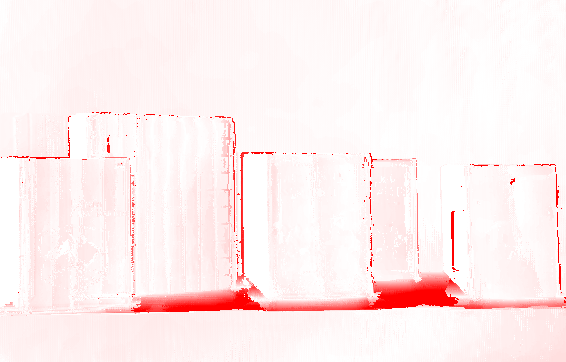

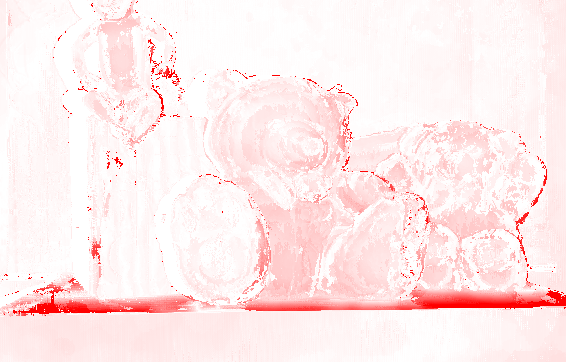

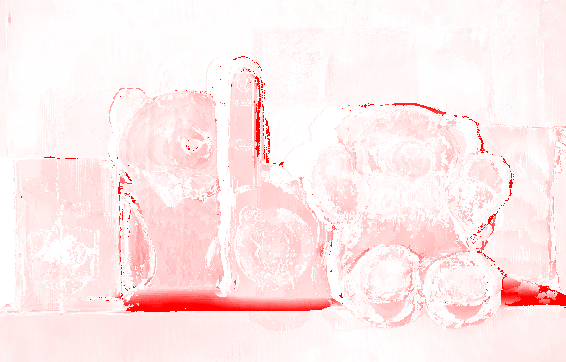

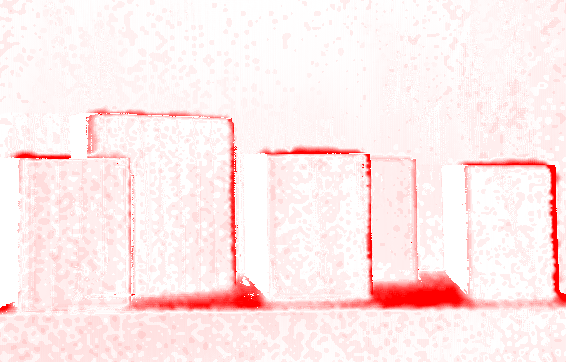

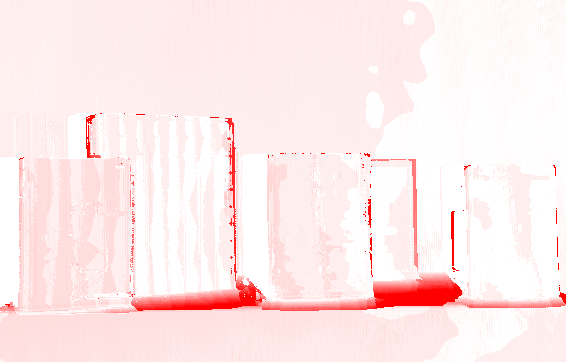

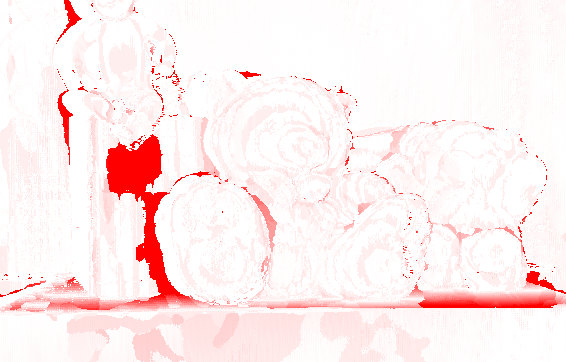

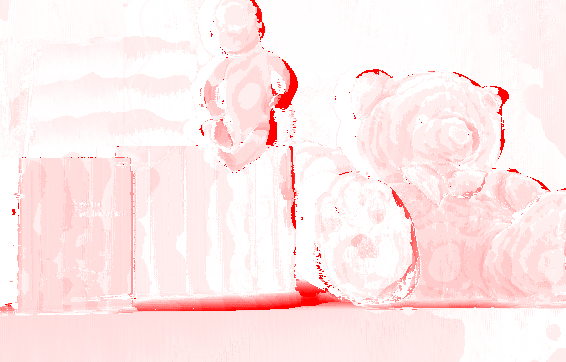

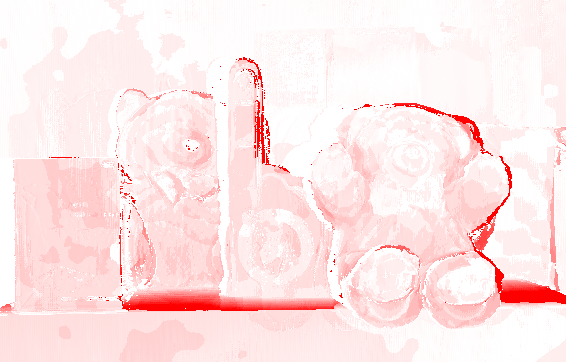

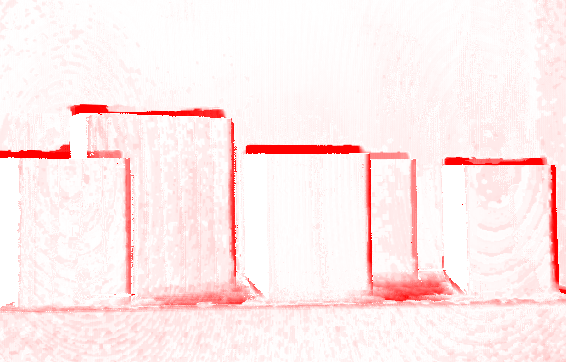

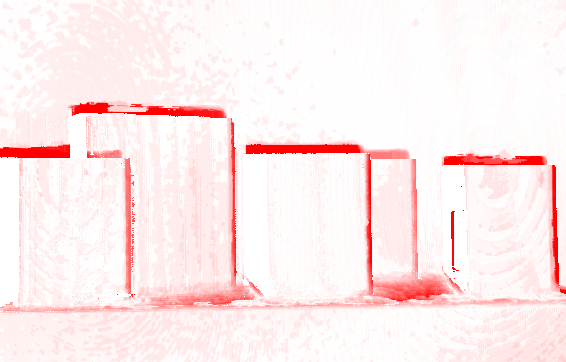

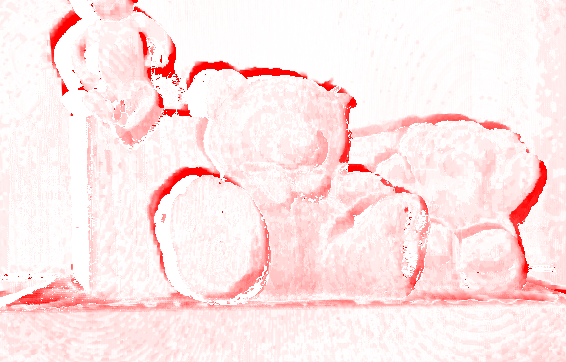

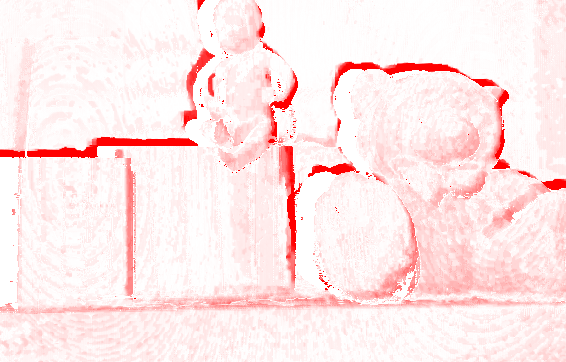

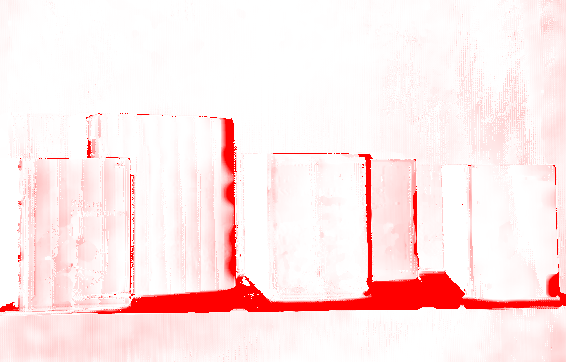

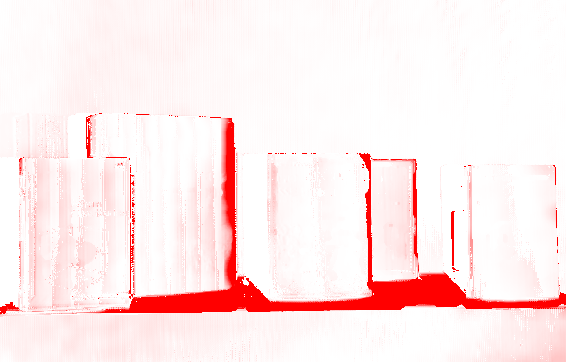

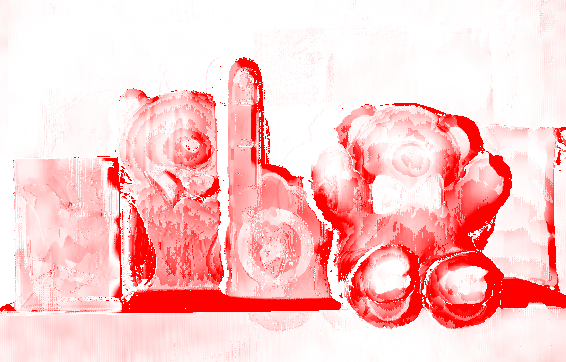

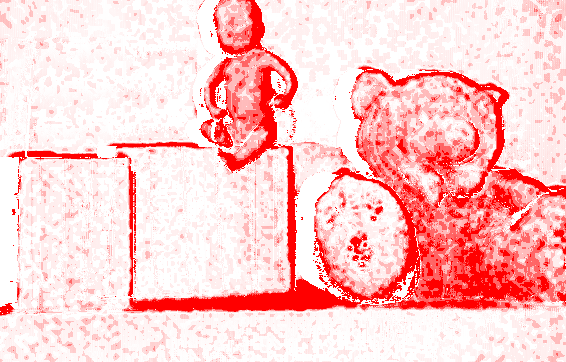

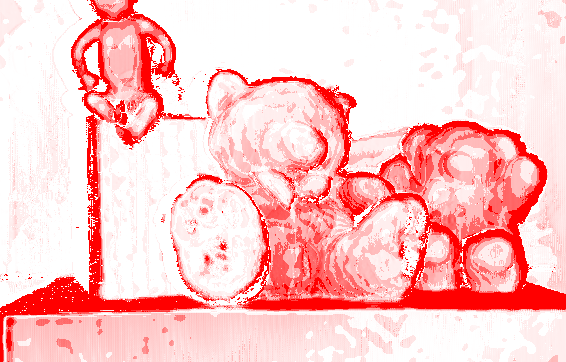

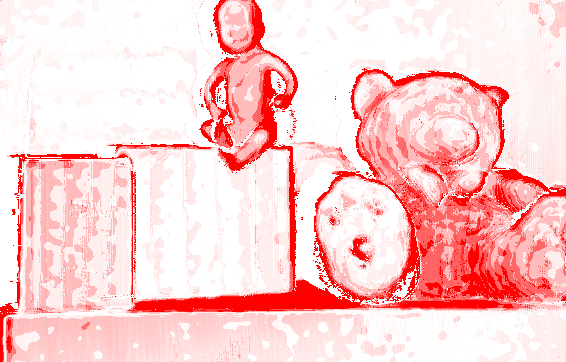

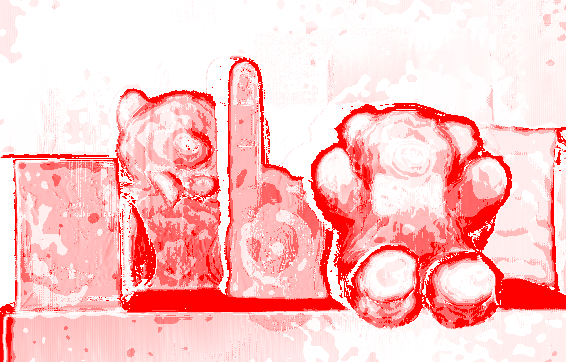

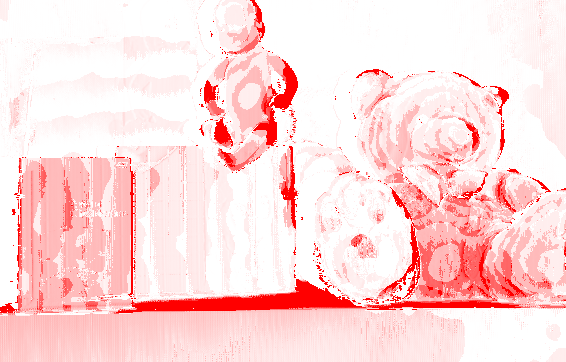

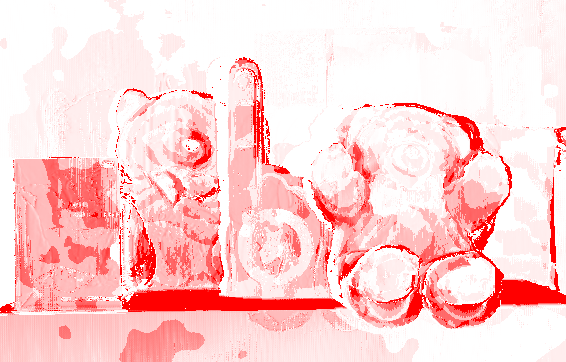

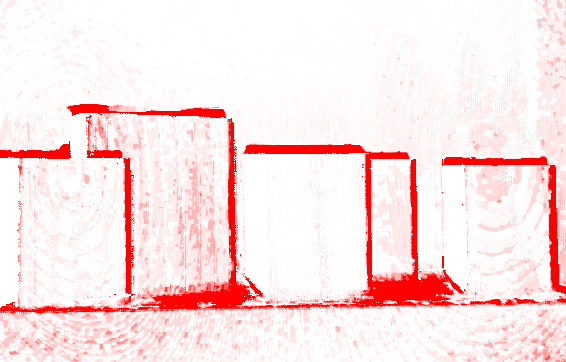

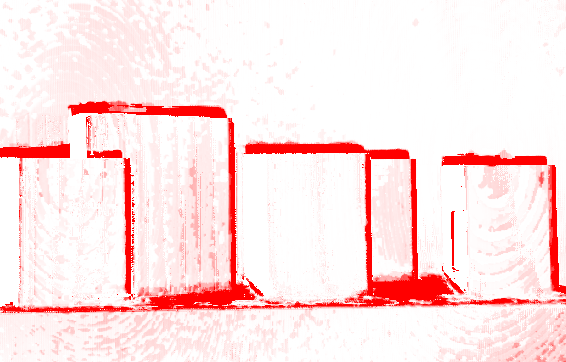

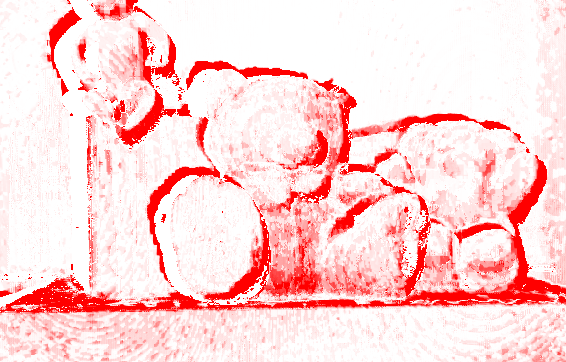

2 Disparity maps

The next table shows a comparison of the disparity maps produced by all the competing algorithms, as well as the intermediate steps of our proposed method, i.e., the upsampled and interpolated map from ToF data and the disparity computed with the SGM [3] algorithm for the stereo system. The colors represent the disparity values ranging from 60 (blue) to 110 (red) pixels. Dark blue points are the ones for which disparity values are not available.
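The color coding described above can be approximated with a short sketch. It assumes a simple linear blue-to-red ramp over the 60 to 110 pixel range and represents missing disparities as `None`; the exact color map used for the images is not specified here, so the function names, the two-color ramp and the dark blue value are illustrative assumptions.

```python
D_MIN, D_MAX = 60.0, 110.0  # disparity range stated in the text, in pixels


def normalize_disparity(d, d_min=D_MIN, d_max=D_MAX):
    """Map a disparity to [0, 1], clamping values outside the range.

    Returns None when the disparity is not available (assumed encoding).
    """
    if d is None:
        return None
    t = (d - d_min) / (d_max - d_min)
    return min(1.0, max(0.0, t))


def to_rgb(d):
    """Illustrative blue (low) to red (high) ramp; not the original color map."""
    t = normalize_disparity(d)
    if t is None:
        return (0, 0, 128)  # dark blue marks missing disparity values
    return (round(255 * t), 0, round(255 * (1.0 - t)))


low, high, missing = to_rgb(60.0), to_rgb(110.0), to_rgb(None)
```

Clamping keeps out-of-range disparities at the ends of the ramp, so they remain distinguishable from the dark blue used for unavailable values.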

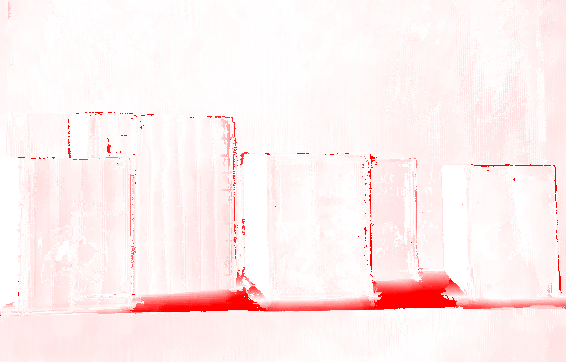

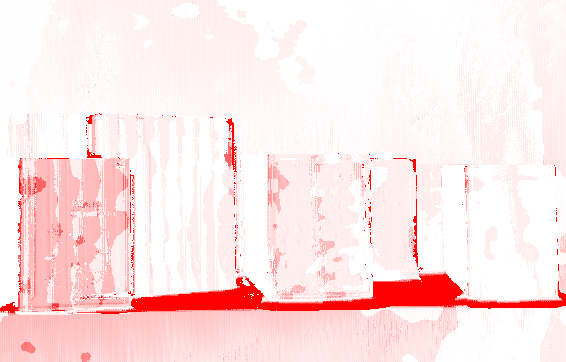

3 Error maps

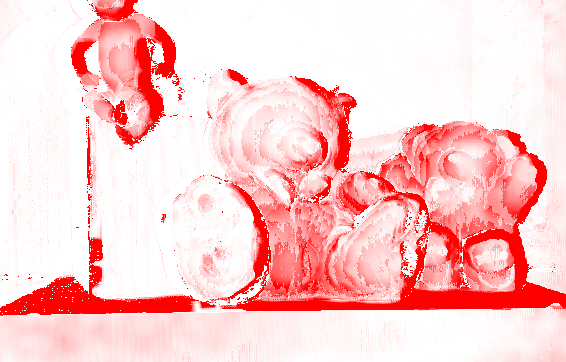

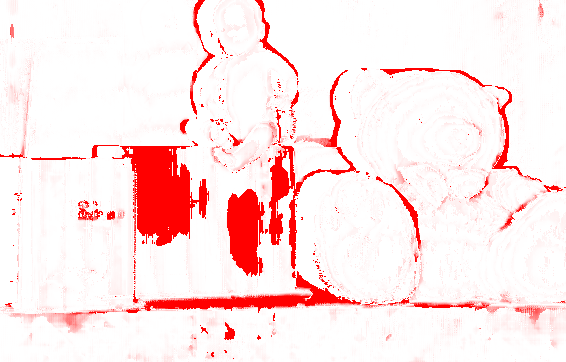

3.1 Absolute difference

The images show the absolute difference between the output disparity maps and the ground truth, i.e., $|D_i - D_{GT}|$, where $D_i$ is the disparity map under evaluation and $D_{GT}$ is the ground truth.
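As a minimal sketch of this measure, the following function computes the per-pixel absolute difference between a disparity map and the ground truth. It assumes both maps are nested lists of the same size and that unavailable values are stored as `None`; the function name and the sample values are hypothetical.

```python
def abs_diff_map(d, d_gt):
    """Per-pixel |D_i - D_GT|; pixels missing in either map stay None."""
    return [
        [None if (a is None or g is None) else abs(a - g)
         for a, g in zip(row_d, row_gt)]
        for row_d, row_gt in zip(d, d_gt)
    ]


# Hypothetical 2x2 disparity maps (pixels), for illustration only.
d_i = [[70.0, 82.5], [None, 100.0]]
d_gt = [[71.0, 82.0], [90.0, 100.0]]
abs_err = abs_diff_map(d_i, d_gt)
```

Keeping missing pixels as `None` avoids counting them as zero error when the map is later averaged or displayed.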

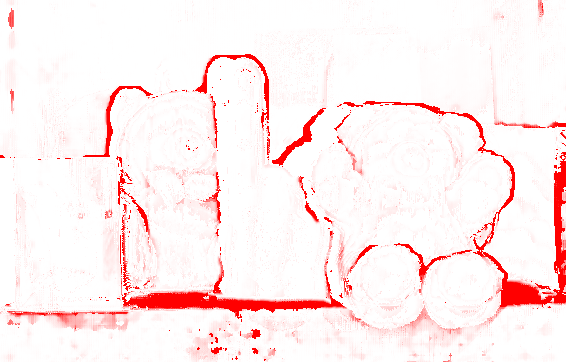

3.2 Mean squared error

The images show the squared error between the output disparity maps and the ground truth, i.e., $|D_i - D_{GT}|^2$, where $D_i$ is the disparity map under evaluation and $D_{GT}$ is the ground truth; averaging these values over the image gives the mean squared error (MSE). Compared with the absolute difference, the squared error penalizes errors larger than 1 pixel more heavily and errors smaller than 1 pixel less heavily.
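The effect of squaring can be seen in a small sketch. It assumes nested-list disparity maps with `None` for unavailable values and averages only over valid pixels; the function names and the sample values are hypothetical and do not come from the dataset.

```python
def squared_error_map(d, d_gt):
    """Per-pixel |D_i - D_GT|^2; pixels missing in either map stay None."""
    return [
        [None if (a is None or g is None) else (a - g) ** 2
         for a, g in zip(row_d, row_gt)]
        for row_d, row_gt in zip(d, d_gt)
    ]


def mean_valid(err_map):
    """Average over the pixels that have a value; None if there are none."""
    values = [v for row in err_map for v in row if v is not None]
    return sum(values) / len(values) if values else None


# Hypothetical maps with absolute errors of 1, 0.5 and 4 pixels.
d_i = [[70.0, 82.5], [None, 104.0]]
d_gt = [[71.0, 82.0], [90.0, 100.0]]
sq_err = squared_error_map(d_i, d_gt)
mse = mean_valid(sq_err)
```

In this example the 0.5 pixel error shrinks to 0.25 after squaring, the 1 pixel error is unchanged, and the 4 pixel error grows to 16, so the largest error dominates the mean.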

Contacts

For further information, you can write to lttm@dei.unipd.it. Have a look at our website http://lttm.dei.unipd.it for other works and datasets on this topic.

References

[1] C. Dal Mutto, P. Zanuttigh, G.M. Cortelazzo, "Probabilistic ToF and Stereo Data Fusion Based on Mixed Pixels Measurement Models", IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015.

[2] C. Dal Mutto, P. Zanuttigh, S. Mattoccia, and G.M. Cortelazzo. Locally consistent ToF and stereo data fusion. In ECCV Workshop on Consumer Depth Cameras for Computer Vision, pages 598–607. Springer, 2012.

[3] Heiko Hirschmuller. Stereo processing by semiglobal matching and mutual information. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 2008.

[4] Q. Yang, R. Yang, J. Davis, and D. Nister. Spatial-depth super resolution for range images. In Computer Vision and Pattern Recognition, 2007. CVPR ’07. IEEE Conference on, pages 1–8, 2007.

[5] J. Zhu, L. Wang, R. Yang, and J. Davis. Fusion of time-of-flight depth and stereo for high accuracy depth maps. In Computer Vision and Pattern Recognition, 2008. CVPR 2008. IEEE Conference on, 2008.

[6] G. Marin, P. Zanuttigh, S. Mattoccia, "Reliable Fusion of ToF and Stereo Depth Driven by Confidence Measures", accepted at ECCV 2016.
