Paper

Time-of-Flight (ToF) sensors and stereo vision systems are both capable of acquiring depth information, but they have complementary characteristics and issues. A more accurate representation of the scene geometry can be obtained by fusing the two depth sources. In this paper we present a novel framework for data fusion where the contribution of the two depth sources is controlled by confidence measures that are jointly estimated using a Convolutional Neural Network. The two depth sources are fused by enforcing the local consistency of depth data, taking into account the estimated confidence information. The deep network is trained on a synthetic dataset, and we show that it is able to generalize to different data, obtaining reliable estimates not only on synthetic data but also on real-world scenes. Experimental results show that the proposed approach increases the accuracy of the depth estimation on both synthetic and real data and that it outperforms state-of-the-art methods.
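To make the role of per-pixel confidences concrete, here is a minimal Python sketch of a plain confidence-weighted average of two depth maps. It is a deliberately simplified stand-in: it is not the locally consistent fusion or the CNN confidence estimation described in the paper. It assumes that the ToF and stereo depth maps are already registered to the same viewpoint and that confidences lie in [0, 1]. The function name `fuse_weighted` is hypothetical.

```python
import numpy as np

def fuse_weighted(depth_tof, depth_stereo, conf_tof, conf_stereo, eps=1e-6):
    """Per-pixel confidence-weighted average of two aligned depth maps.

    Simplified illustration only (hypothetical helper): unlike the paper's
    locally consistent fusion, it ignores neighbouring pixels entirely.
    Confidences are assumed to lie in [0, 1] and are clipped to that range.
    """
    d_tof = np.asarray(depth_tof, dtype=np.float64)
    d_st = np.asarray(depth_stereo, dtype=np.float64)
    w_tof = np.clip(np.asarray(conf_tof, dtype=np.float64), 0.0, 1.0)
    w_st = np.clip(np.asarray(conf_stereo, dtype=np.float64), 0.0, 1.0)
    total = w_tof + w_st
    # The weighted mean; np.maximum avoids division by zero.
    fused = (w_tof * d_tof + w_st * d_st) / np.maximum(total, eps)
    # Where neither source is trusted, mark the pixel as invalid (NaN).
    return np.where(total > eps, fused, np.nan)
```

For example, a pixel measured at 1.0 by the ToF sensor and 3.0 by stereo, with both confidences equal to 0.5, fuses to 2.0. A pixel where both confidences are 0 is returned as NaN.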

The full paper can be downloaded from here

Dataset

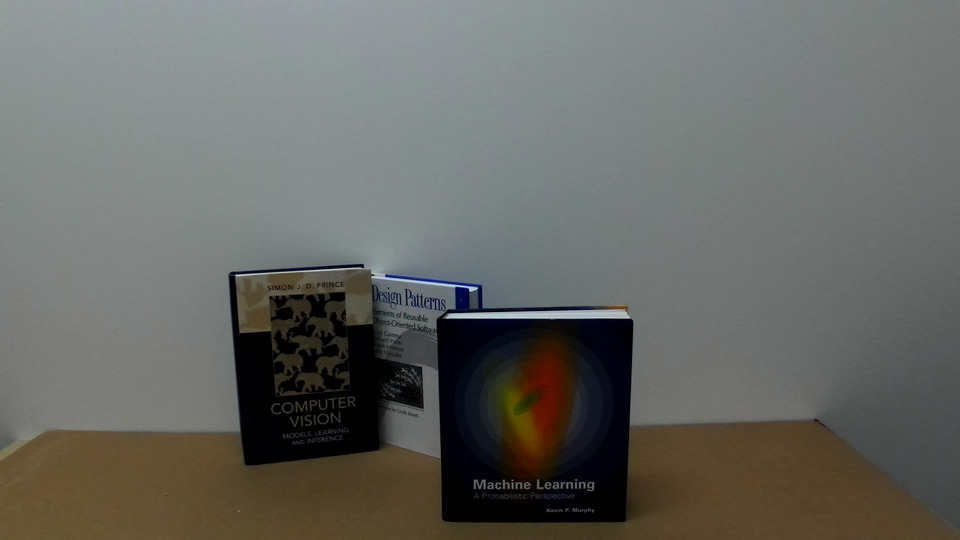

The experimental evaluation in the paper was performed on three different datasets:

- The SYNTH3 synthetic dataset, which can be downloaded from here

- The REAL3 dataset, which can be downloaded from this webpage

- The LTTM5 dataset, which can be downloaded from here

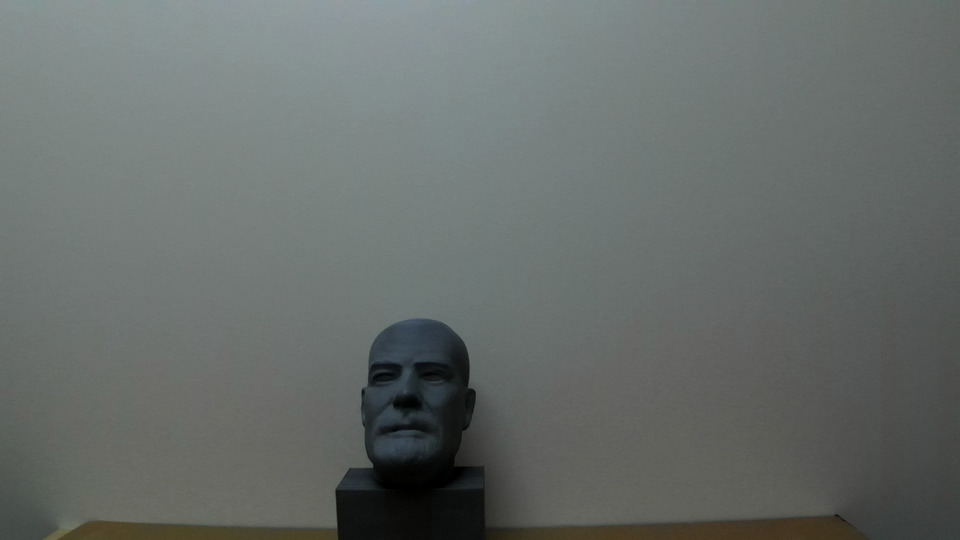

A zipped archive with the REAL3 dataset can be downloaded from here. It contains one folder for each of the 8 scenes, with the following data:

- The rectified left color image from the ZED stereo camera (zed_left.png)

- The rectified right color image from the ZED stereo camera (zed_right.png)

- The Kinect v2 ToF depth map (kinect_depth.png)

- The Kinect v2 ToF amplitude (kinect_amplitude.png)

- The ground truth disparity map (gt_depth.png)
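The ground truth is given as a disparity map, while the Kinect v2 provides depth. For a rectified stereo pair the two are related by Z = f·b/d, where f is the focal length in pixels, b is the baseline and d is the disparity in pixels. The Python sketch below converts in both directions. Assumptions: the disparities refer to the rectified ZED pair, and f and b are taken from the calibration data. The function names are hypothetical and the numbers in the example are illustrative only.

```python
import numpy as np

def _fb_over(x, focal_px, baseline):
    """Element-wise focal_px * baseline / x; non-positive or NaN x -> NaN."""
    x = np.asarray(x, dtype=np.float64)
    out = np.full(x.shape, np.nan)
    valid = np.isfinite(x) & (x > 0)
    out[valid] = focal_px * baseline / x[valid]
    return out

def disparity_to_depth(disparity, focal_px, baseline):
    """Depth Z = f * b / d for a rectified pair; Z has the unit of `baseline`."""
    return _fb_over(disparity, focal_px, baseline)

def depth_to_disparity(depth, focal_px, baseline):
    """Disparity d = f * b / Z (pixels): the same relation solved for d."""
    return _fb_over(depth, focal_px, baseline)
```

With an illustrative f = 700 px and b = 0.12 m, a disparity of 10 px maps to a depth of 8.4 m. Zero disparity, which usually marks a missing ground-truth value, becomes NaN.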

To store the floating-point amplitude and depth data in PNG images, we used a custom representation in which each pixel of a 4-channel PNG stores the 32-bit representation of one value. The imread32f.m file contains a simple MATLAB script for loading the amplitude, depth and ground truth files.
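The exact byte layout is defined by imread32f.m. Outside MATLAB, one possible Python decoder is sketched below, but it rests on an explicit assumption: the four 8-bit channels of each pixel hold the four bytes of one IEEE-754 float32, in channel order, little-endian by default. `decode_float32_png` is a hypothetical helper that is not part of the dataset, so compare its output with imread32f.m before relying on it.

```python
import numpy as np

def decode_float32_png(rgba, byteorder="<"):
    """Reinterpret an H x W x 4 uint8 array as an H x W float32 map.

    ASSUMPTION: channel k of each pixel holds byte k of one float32 with
    the given byte order ("<" little-endian, ">" big-endian). Verify this
    against imread32f.m; switch `byteorder` if the values look wrong.
    """
    rgba = np.ascontiguousarray(rgba, dtype=np.uint8)
    if rgba.ndim != 3 or rgba.shape[2] != 4:
        raise ValueError("expected an H x W x 4 uint8 array")
    h, w, _ = rgba.shape
    # View every group of 4 consecutive bytes as one 32-bit float.
    return rgba.reshape(-1).view(np.dtype(byteorder + "f4")).reshape(h, w)
```

With Pillow installed, a file could then be decoded with `decode_float32_png(np.asarray(Image.open("kinect_depth.png")))`, provided the PNG is read as 8-bit RGBA.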

Finally, the calibrationREAL.xml file contains the intrinsic and extrinsic parameters of the acquisition setup. The calibration data is stored in the format used by the OpenCV computer vision library; refer to the OpenCV documentation for more details.
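With OpenCV installed, the standard way to read such a file from Python is `cv2.FileStorage(path, cv2.FILE_STORAGE_READ)` followed by `getNode(name).mat()`. As a lighter alternative, the sketch below parses the `opencv-matrix` nodes of OpenCV's XML format using only the standard library and NumPy. The node names inside calibrationREAL.xml are not listed on this page, so the function returns every matrix it finds. `read_opencv_matrices` is a hypothetical helper, and it handles only single-channel matrices, which is the usual case for intrinsic and extrinsic parameters.

```python
import xml.etree.ElementTree as ET
import numpy as np

def read_opencv_matrices(source):
    """Return {node_name: ndarray} for each top-level opencv-matrix node.

    `source` is a path or a file object. Scalars and other node types are
    skipped; multi-channel matrices (e.g. dt="3d") are not supported.
    """
    root = ET.parse(source).getroot()  # the <opencv_storage> element
    mats = {}
    for node in root:
        if node.get("type_id") != "opencv-matrix":
            continue
        rows = int(node.findtext("rows"))
        cols = int(node.findtext("cols"))
        # <data> holds the values in row-major order, separated by whitespace.
        values = [float(v) for v in node.findtext("data").split()]
        mats[node.tag] = np.array(values, dtype=np.float64).reshape(rows, cols)
    return mats
```

Printing `sorted(read_opencv_matrices("calibrationREAL.xml"))` is a quick way to discover which matrices the file actually contains.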

Downloads

- REAL3 dataset

- Webpage for the SYNTH3 dataset

- Webpage for the LTTM5 dataset

At the address http://lttm.dei.unipd.it/nuovo/datasets.html you can find other ToF and stereo datasets from our research group.

Contacts

For any information on the data, you can write to lttm@dei.unipd.it. Have a look at our website http://lttm.dei.unipd.it for other works and datasets on this topic.

References

[1] G. Agresti, L. Minto, G. Marin and P. Zanuttigh, "Stereo and ToF Data Fusion by Learning from Synthetic Data", submitted to Elsevier Fusion Journal (under review).

[2] G. Agresti, L. Minto, G. Marin and P. Zanuttigh, "Deep Learning for Confidence Information in Stereo and ToF Data Fusion", 3D Reconstruction meets Semantics ICCV Workshop, 2017.
