
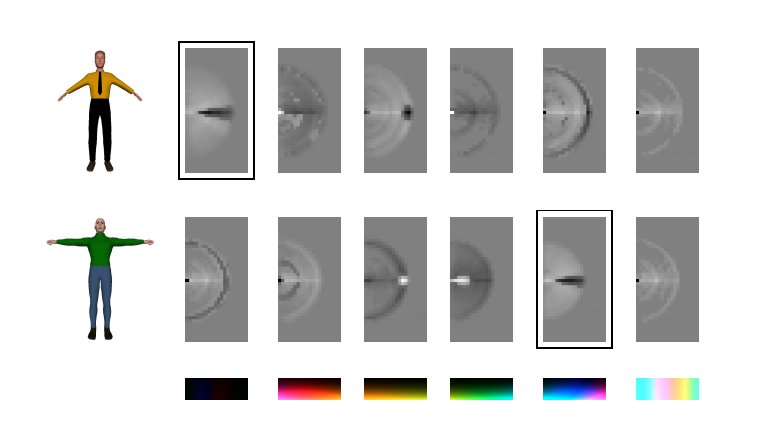

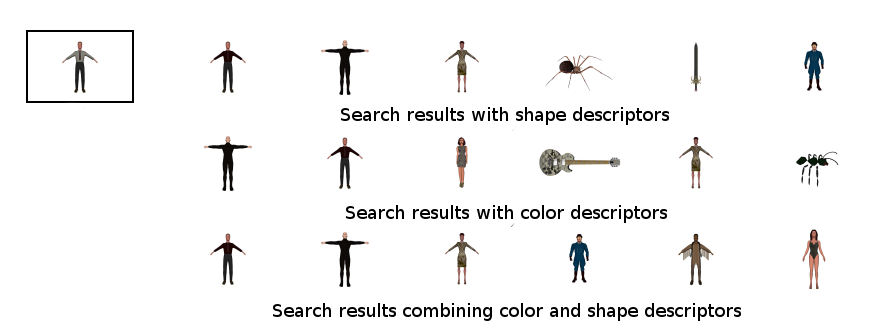

Current content-based retrieval schemes for 3D models rely on shape information only and typically ignore other cues, such as the color data associated with the models. Combining shape and color cues can potentially improve 3D model retrieval performance, but this idea is still almost unexplored. A possible approach is to extend shape-based 3D model retrieval methods of proven effectiveness so that they include color data. Following this rationale, we have introduced an extended version of the spin-image descriptor that also accounts for color data [1]. These descriptors are compared using a novel scheme that also recognizes as similar objects whose colors differ but are distributed in the same way over the shape. Shape and color similarity are finally combined by an algorithm based on fuzzy logic. Experimental results show that the joint use of color and shape data improves retrieval performance, especially on object classes with meaningful color information.
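As a rough illustration of how two similarity scores might be merged with fuzzy logic, the sketch below uses a small zero-order Sugeno-style rule base. It is not the algorithm of [1]: the function name `fuzzy_combine`, the linear "high"/"low" memberships, the four rules and their output levels are all assumptions chosen only to make the idea concrete.

```python
from typing import List, Tuple


def fuzzy_combine(shape_sim: float, color_sim: float) -> float:
    """Merge shape and color similarity (both in [0, 1]) into one score.

    Hypothetical rule base, for illustration only; the actual rules and
    membership functions used in [1] are not described in this text.
    """
    for value in (shape_sim, color_sim):
        if not 0.0 <= value <= 1.0:
            raise ValueError("similarities must lie in [0, 1]")

    # Linear membership degrees for the fuzzy sets "high" and "low".
    shape_high, shape_low = shape_sim, 1.0 - shape_sim
    color_high, color_low = color_sim, 1.0 - color_sim

    # Each rule: (firing strength via fuzzy AND = min, assumed output level).
    rules: List[Tuple[float, float]] = [
        (min(shape_high, color_high), 1.0),  # both agree: very similar
        (min(shape_high, color_low), 0.6),   # shape matches, color does not
        (min(shape_low, color_high), 0.3),   # color matches, shape does not
        (min(shape_low, color_low), 0.0),    # neither matches
    ]

    # Defuzzify with a firing-strength-weighted average of the rule outputs.
    # The total is never zero: at least one rule fires with strength >= 0.5.
    total = sum(strength for strength, _ in rules)
    return sum(strength * level for strength, level in rules) / total
```

In this sketch the shape-only rule is given a higher output level than the color-only rule, which reflects a design choice (shape as the primary cue) rather than anything stated in the source.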

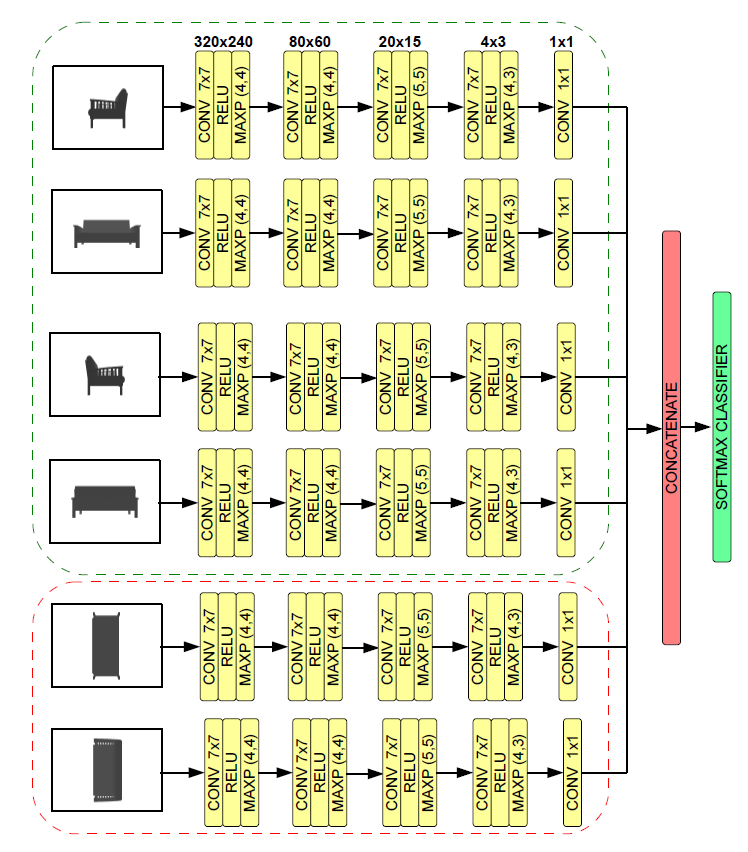

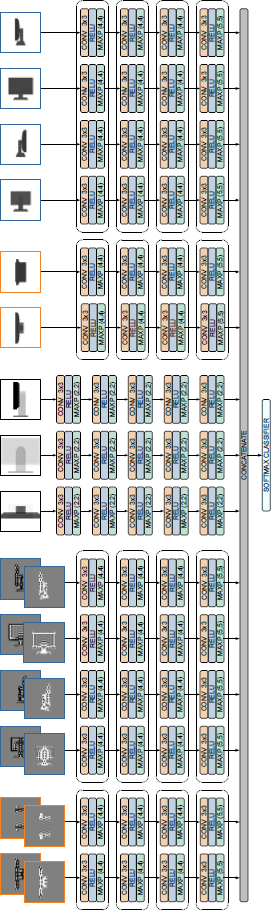

In the first version [2], the algorithm starts by extracting a set of depth maps, obtained by rendering the input 3D shape from different viewpoints. The depth maps are then fed to a multi-branch Convolutional Neural Network (CNN). Each branch of the network takes one of the depth maps as input and produces a classification vector using 5 convolutional layers of progressively reduced resolution. The classification vectors are finally fed to a linear classifier that combines the outputs of the branches and produces the final classification. Experimental results on the Princeton ModelNet database show that the proposed approach achieves high classification accuracy.

A more refined version [3] also exploits surface and volumetric cues. It uses three different data representations: 1) a set of depth maps obtained by rendering the 3D object as in [2]; 2) a novel volumetric representation obtained by counting the number of filled voxels along each direction; 3) NURBS surface curvature parameters. All three data representations are fed to a multi-branch Convolutional Neural Network in which each branch processes a different data source. The extracted feature vectors are fed to a linear classifier that combines them to produce the final predictions.
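The volumetric representation based on counting filled voxels can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation of [3]: it assumes the object has already been voxelized into a binary occupancy grid indexed as `grid[x][y][z]`, takes "each direction" to mean the three grid axes, and uses the hypothetical name `count_filled_along_axes`.

```python
from typing import List, Tuple

Grid3D = List[List[List[int]]]  # grid[x][y][z] is 1 for a filled voxel, else 0
Map2D = List[List[int]]


def count_filled_along_axes(grid: Grid3D) -> Tuple[Map2D, Map2D, Map2D]:
    """Return three 2D maps holding the number of filled voxels along x, y, z.

    Assumes a non-empty, rectangular occupancy grid (hypothetical input format).
    """
    nx, ny, nz = len(grid), len(grid[0]), len(grid[0][0])

    # along_x[y][z]: filled voxels met when sweeping the x axis at fixed (y, z).
    along_x = [[sum(grid[x][y][z] for x in range(nx)) for z in range(nz)]
               for y in range(ny)]
    # along_y[x][z]: filled voxels met when sweeping the y axis at fixed (x, z).
    along_y = [[sum(grid[x][y][z] for y in range(ny)) for z in range(nz)]
               for x in range(nx)]
    # along_z[x][y]: filled voxels met when sweeping the z axis at fixed (x, y).
    along_z = [[sum(grid[x][y][z] for z in range(nz)) for y in range(ny)]
               for x in range(nx)]
    return along_x, along_y, along_z


# Tiny 2x2x2 example with three filled voxels.
example_grid = [
    [[1, 0], [0, 1]],
    [[1, 0], [0, 0]],
]
maps_x, maps_y, maps_z = count_filled_along_axes(example_grid)
```

Each resulting map is a 2D image-like array, which is the kind of input a CNN branch can consume alongside the depth maps.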

Related papers:

[3] L. Minto, P. Zanuttigh, G. Pagnutti
