Abstract

Depth maps acquired with ToF cameras have limited accuracy due to the high noise level and to multi-path interference. Deep networks can be used for refining ToF depth, but their training requires real world acquisitions with ground truth, which are complex and expensive to collect. A possible workaround is to train networks on synthetic data, but the domain shift between real and synthetic data degrades performance. In this paper, we propose three approaches to perform unsupervised domain adaptation of a depth denoising network from synthetic to real data. These approaches act at the input, at the feature and at the output level of the network, respectively. The first approach uses domain translation networks to transform labeled synthetic ToF data into a representation closer to real data, which is then used to train the denoiser. The second approach tries to align the internal network features related to synthetic and real data. The third approach uses an adversarial loss, implemented with a discriminator trained to recognize the ground truth statistics, to train the denoiser on unlabeled real data. Experimental results show that the considered approaches are able to outperform other state-of-the-art techniques and achieve superior denoising performance.

The paper can be downloaded here.

Time-of-Flight Datasets

For the training and evaluation of the proposed ToF denoising method, we used five datasets, called "S1", "S2", "S3", "S4" and "S5" in the paper.

The real world datasets "S2", "S3" and "S5" have been introduced in [1]. They have been used for the training and the evaluation of the proposed Domain Adapted CNN for ToF data denoising. The synthetic dataset "S1" and the real world one "S4" have instead been introduced in [2] and can be downloaded from here.

Table 1 summarizes the various datasets exploited in the paper and provides the links to download them.

| Dataset | Type | GT | # scenes | Used for |
|---|---|---|---|---|
| S1 | Synthetic | Yes | 40 | Supervised training |
| S2 | Real | No | 97 | Adversarial training |
| S3 | Real | Yes | 8 | Validation |
| S4 | Real | Yes | 8 | Testing |
| S5 | Real | Yes | 8 | Testing |
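The roles listed in Table 1 can be captured in a small lookup structure, which may help keep the train, validation and test splits separate in experiment scripts. The following is only a minimal sketch: the class name `ToFDataset`, the field names and the helper `datasets_for` are hypothetical and do not come from the paper or its code; only the values are taken from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToFDataset:
    """One row of Table 1 (field names are illustrative, not from the paper)."""
    name: str
    kind: str               # "synthetic" or "real"
    has_ground_truth: bool
    num_scenes: int
    role: str


# Values copied from Table 1.
DATASETS = (
    ToFDataset("S1", "synthetic", True, 40, "supervised training"),
    ToFDataset("S2", "real", False, 97, "adversarial training"),
    ToFDataset("S3", "real", True, 8, "validation"),
    ToFDataset("S4", "real", True, 8, "testing"),
    ToFDataset("S5", "real", True, 8, "testing"),
)


def datasets_for(role):
    """Return the names of the datasets assigned to the given role."""
    return [d.name for d in DATASETS if d.role == role]


def unlabeled_datasets():
    """Return the names of the datasets that come without depth ground truth."""
    return [d.name for d in DATASETS if not d.has_ground_truth]
```

For example, `datasets_for("testing")` returns the two real test sets, while `unlabeled_datasets()` returns only "S2", the set used for the unsupervised adaptation.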

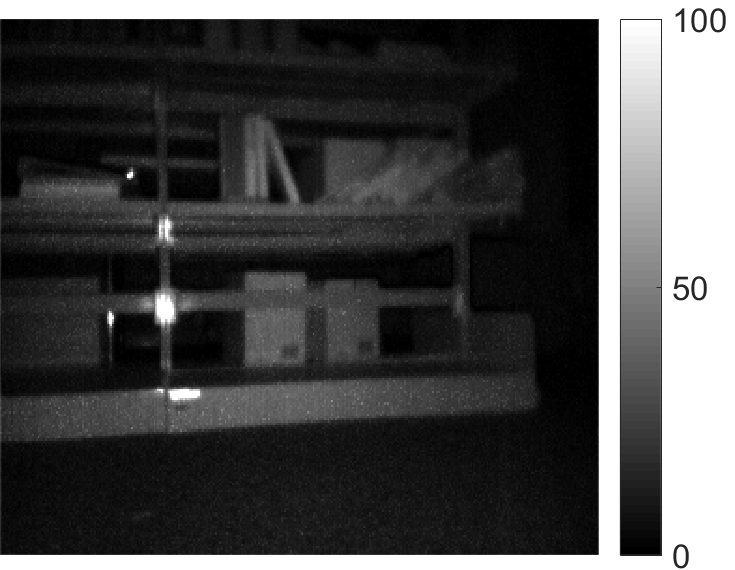

- the archive "S2.zip" contains the depth, amplitude and intensity maps (".mat" files) captured with the ToF camera at 10, 20, 30, 40, 50 and 60 MHz on the 97 scenes contained in the "S2" real dataset. The ToF data are recorded in an office environment with uncontrolled lighting conditions. This dataset was used for the unsupervised training of the proposed Domain Adapted CNN for ToF data denoising. Notice that no ground truth information is provided for this dataset (it is used for unsupervised adaptation).

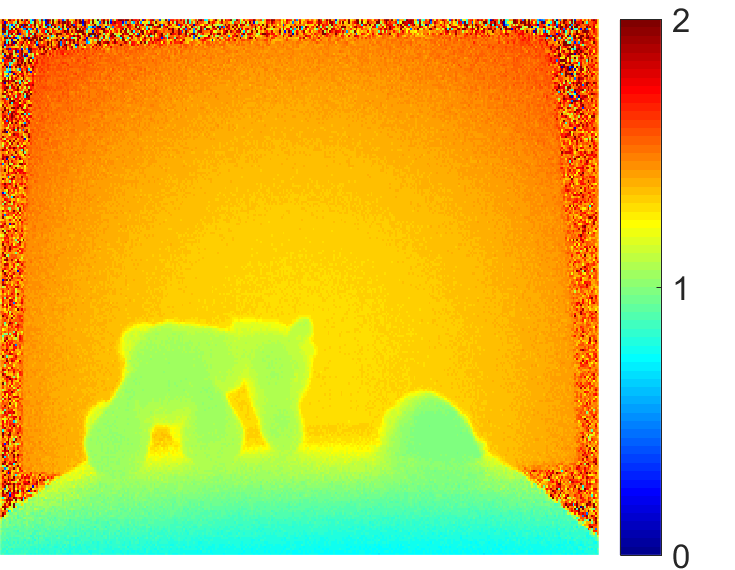

- the archive "S3.zip" contains the depth, amplitude and intensity maps (".mat" files) captured with the ToF camera at 10, 20, 30, 40, 50 and 60 MHz on the 8 scenes contained in the S3 real validation set. The ToF data are recorded in a laboratory with no external illumination. This dataset is provided with the depth ground truth and it is used for validation purposes in the training process.

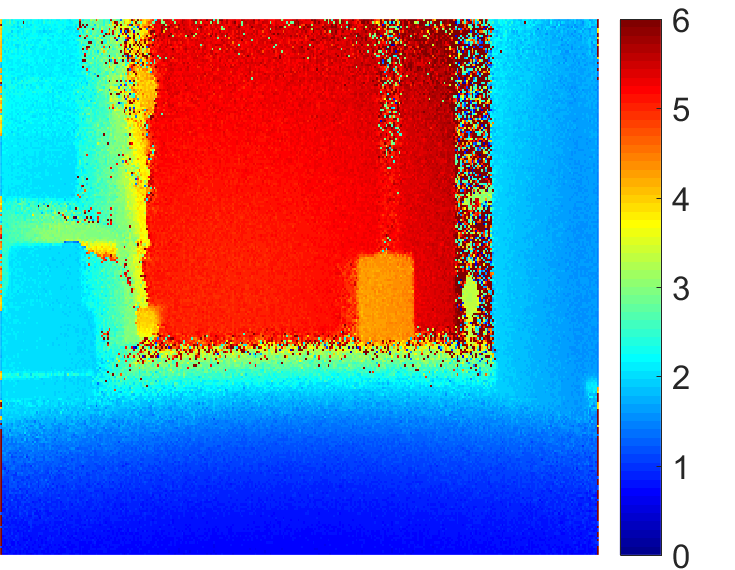

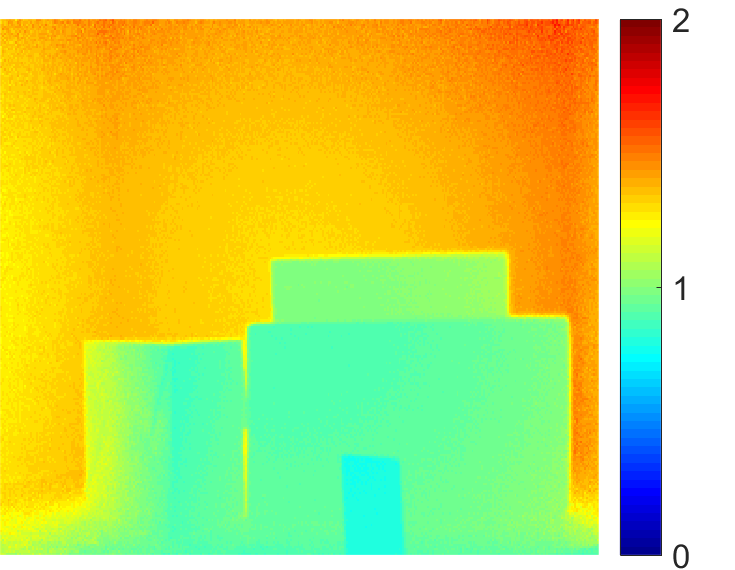

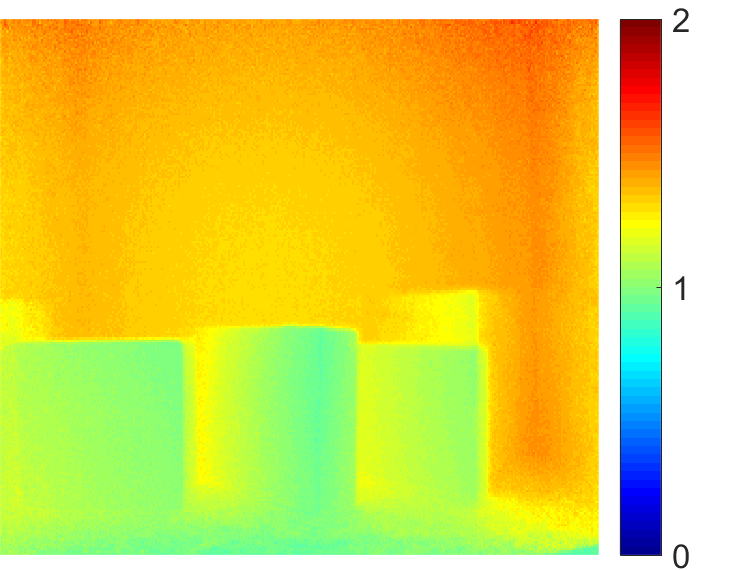

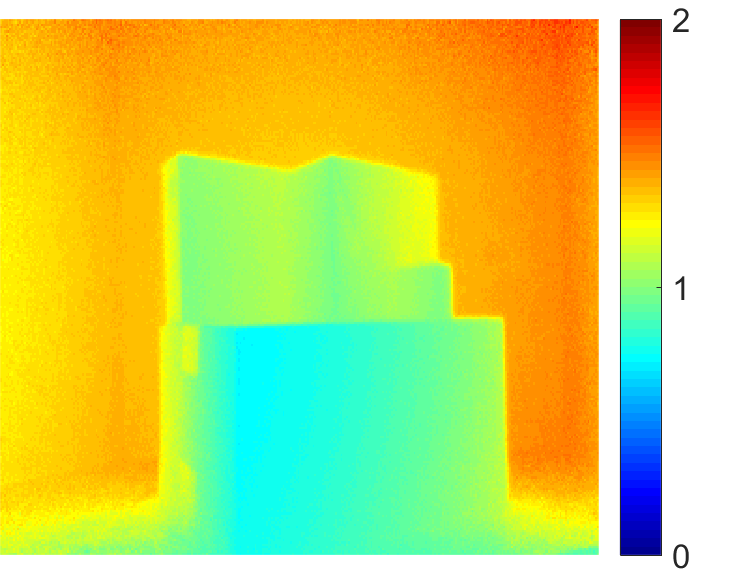

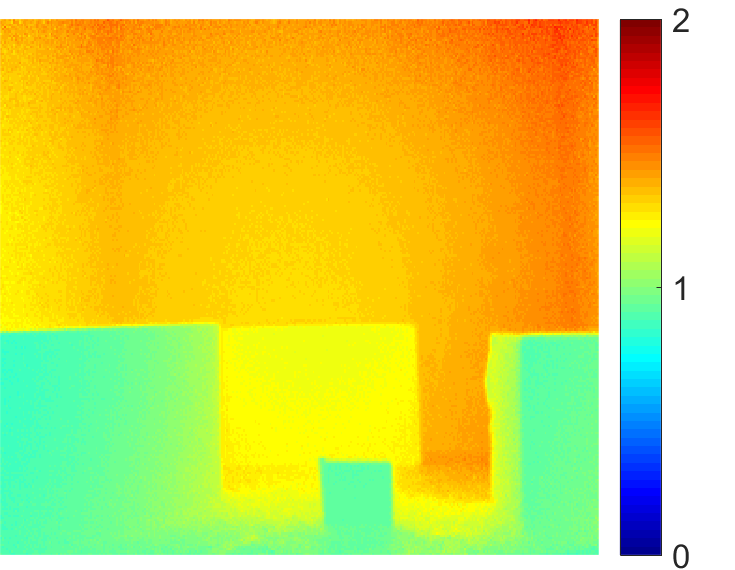

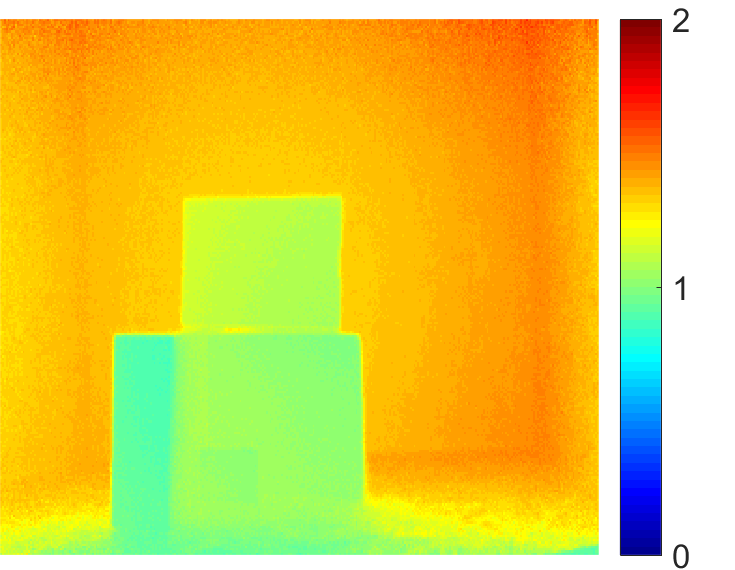

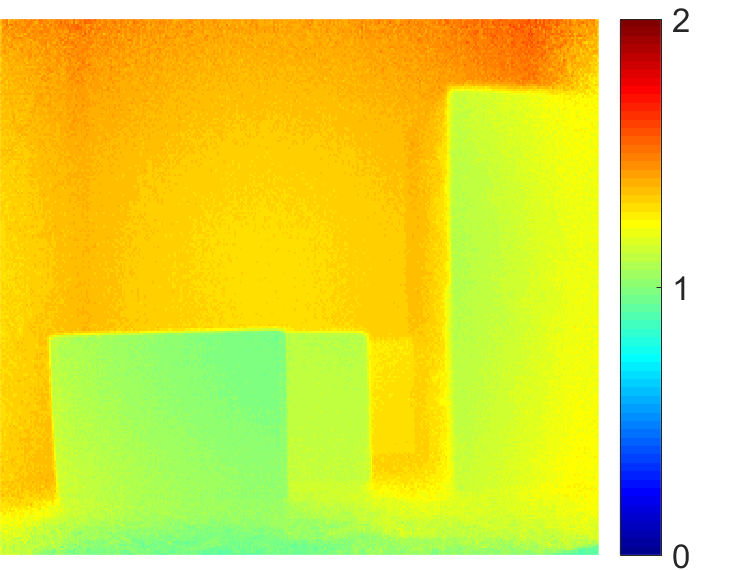

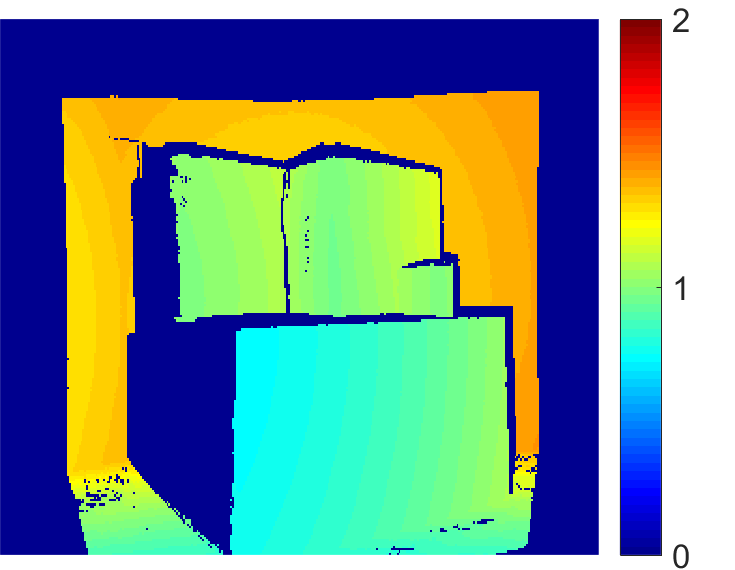

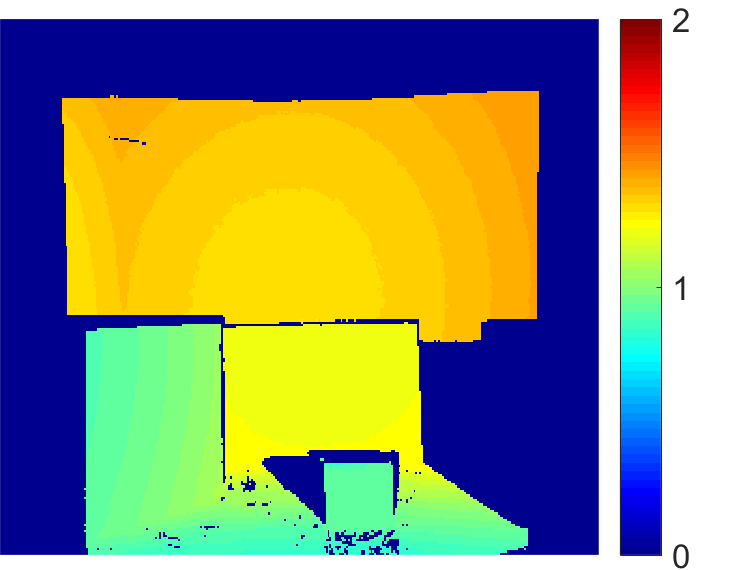

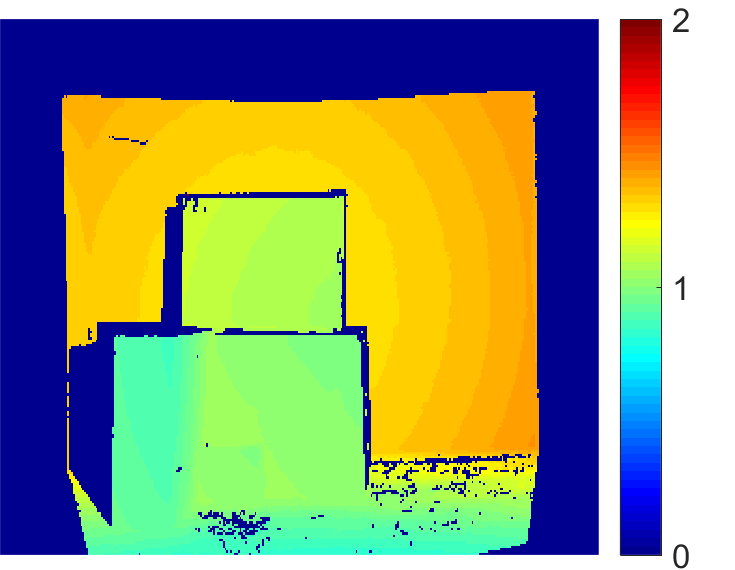

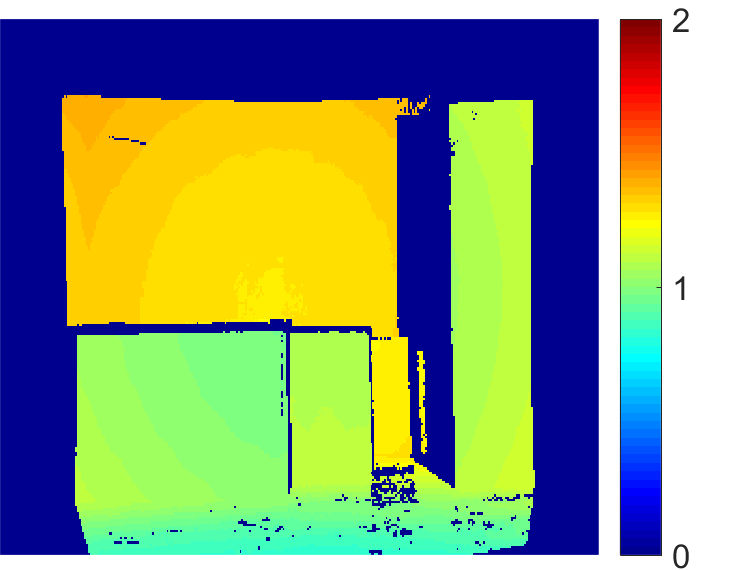

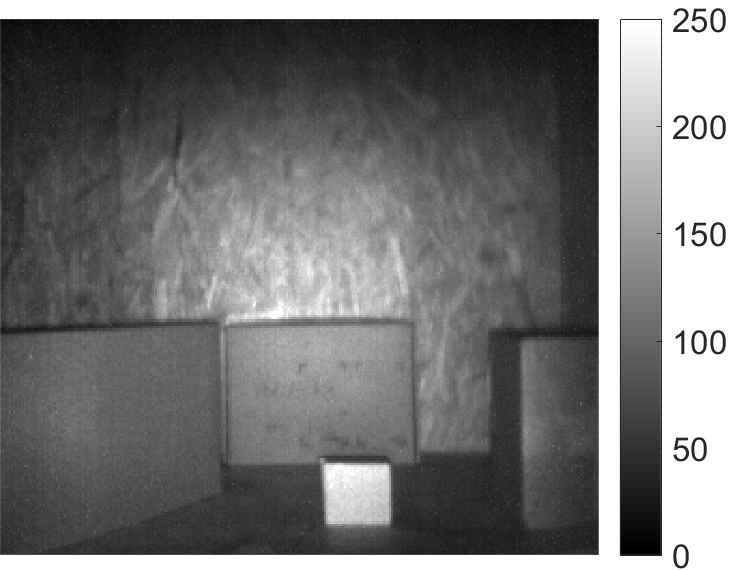

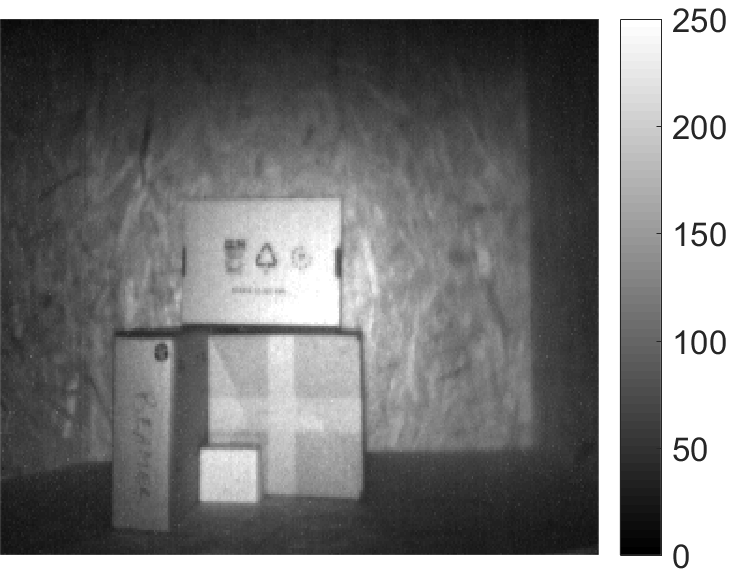

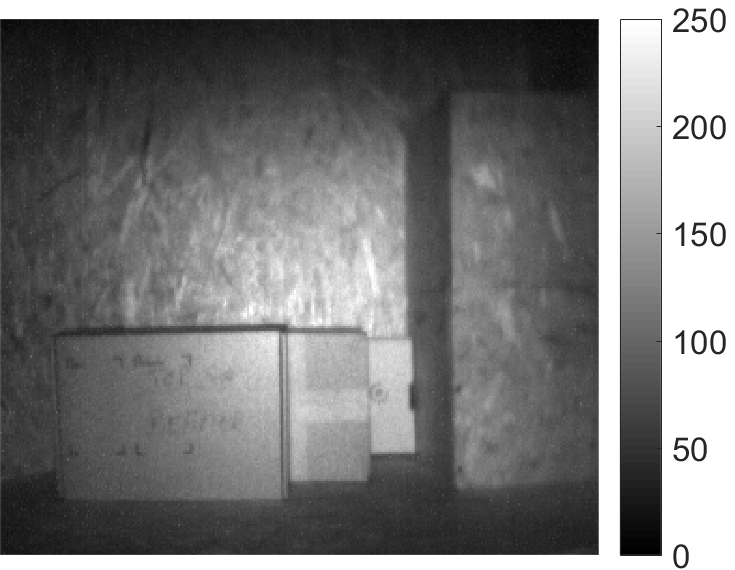

- the archive "S5.zip" contains the depth, amplitude and intensity maps (".mat" files) captured with the ToF camera at 10, 20, 30, 40, 50 and 60 MHz on the 8 scenes contained in the S5 real test set, the "Box dataset". The ToF data are recorded in a laboratory with no external illumination. This dataset is provided with the depth ground truth and it is used for the testing of the proposed Domain Adapted CNN for ToF data denoising.
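Since this page does not document the variable names stored inside the ".mat" files, a reasonable first step after downloading an archive is to list them. The sketch below assumes the files are in a MATLAB format that SciPy can read (SciPy does not read v7.3/HDF5 files, which would need a different reader); the function name `list_mat_variables` and the output folder are hypothetical.

```python
import zipfile
from pathlib import Path

import scipy.io


def list_mat_variables(archive_path, extract_dir):
    """Extract a dataset archive and map each .mat file to {variable: shape}.

    Assumption: the .mat files are readable by SciPy (not MATLAB v7.3).
    """
    extract_dir = Path(extract_dir)
    with zipfile.ZipFile(archive_path) as archive:
        archive.extractall(extract_dir)

    summary = {}
    for mat_path in sorted(extract_dir.rglob("*.mat")):
        # whosmat reads only the variable headers, not the array data.
        entries = scipy.io.whosmat(str(mat_path))
        key = mat_path.relative_to(extract_dir).as_posix()
        summary[key] = {name: shape for name, shape, _dtype in entries}
    return summary
```

A call such as `list_mat_variables("S5.zip", "S5")` would then show which arrays (depth, amplitude, intensity, ground truth) each file holds and at which resolution, before any of them is loaded with `scipy.io.loadmat`.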

| Scene 0 | Scene 1 | Scene 2 | Scene 3 | ||

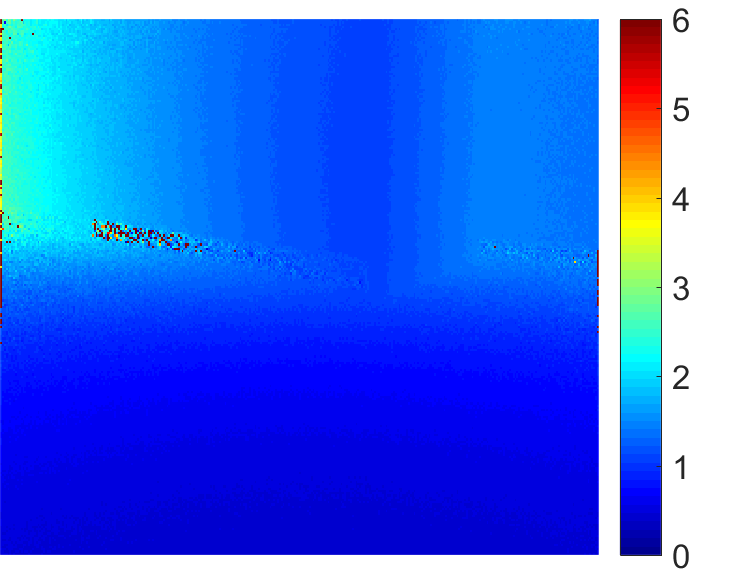

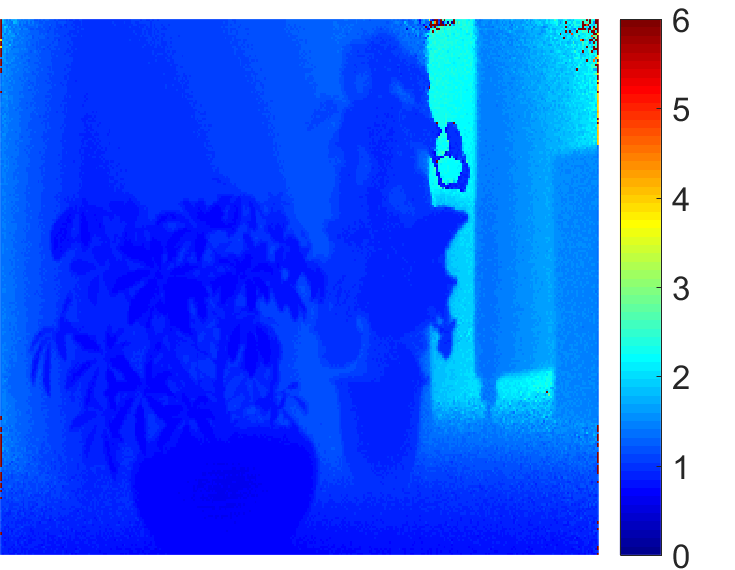

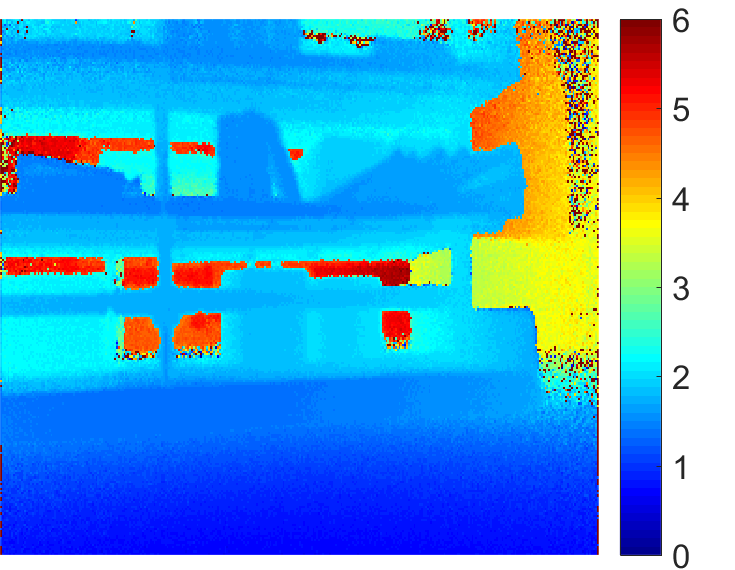

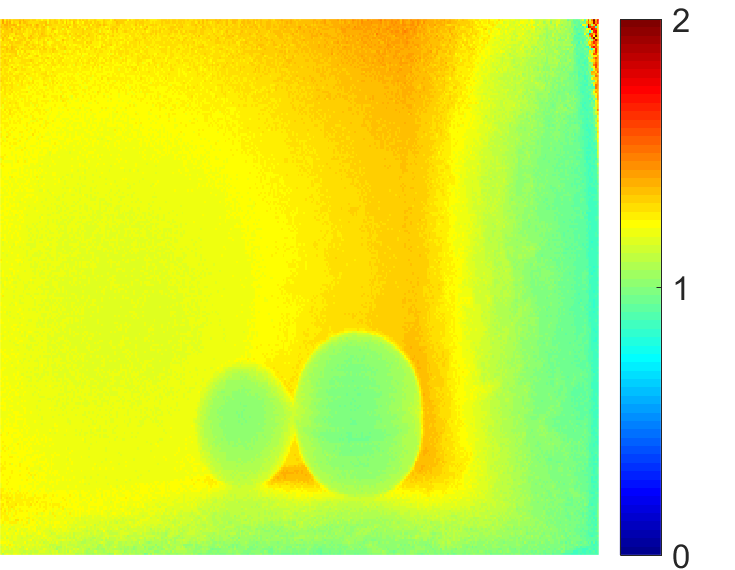

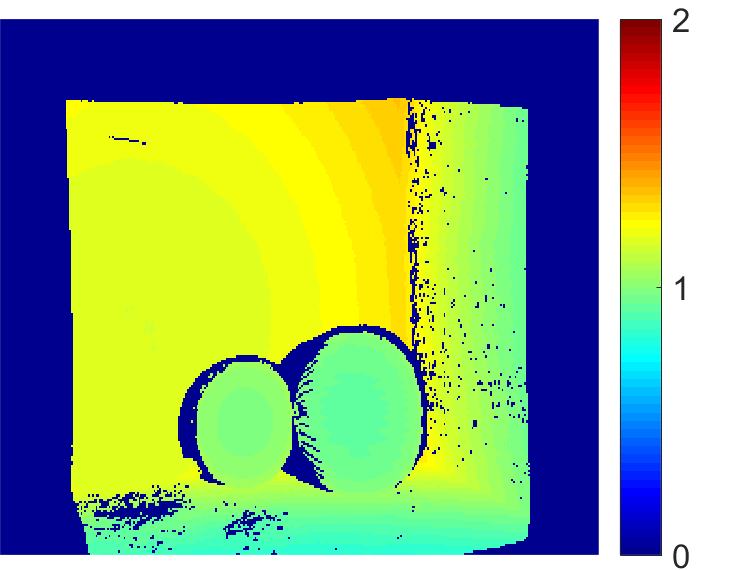

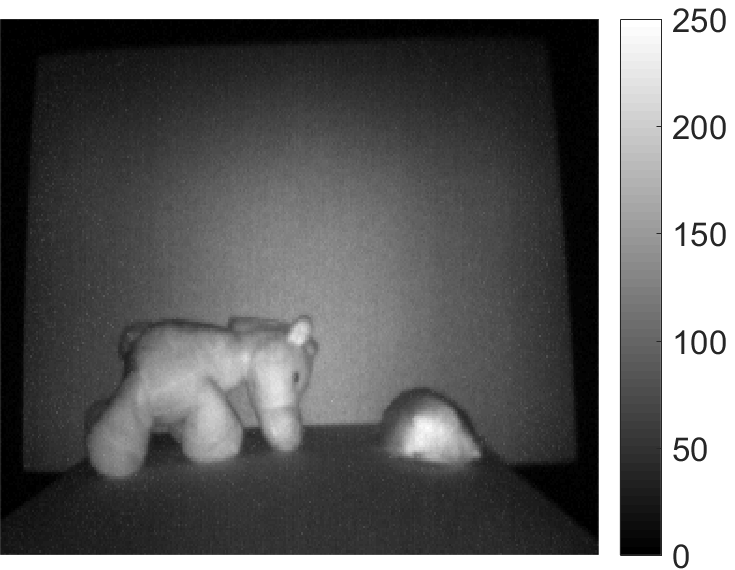

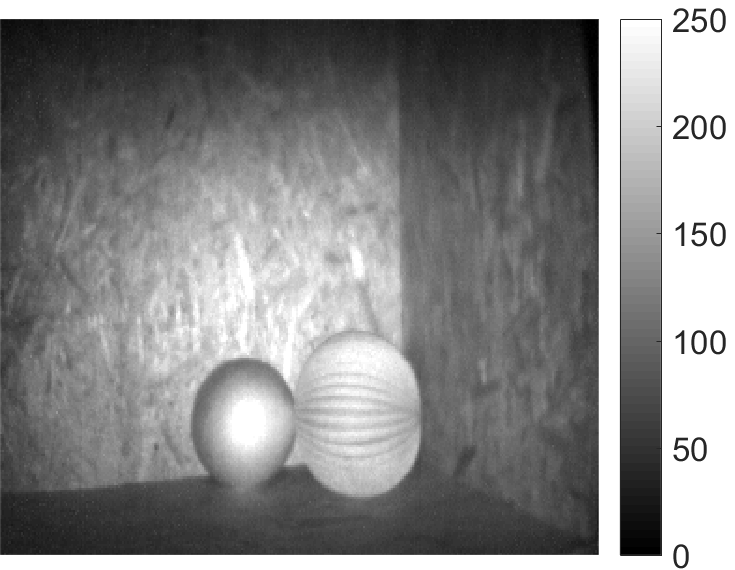

| Depth map [m] |  |  |  |  | |

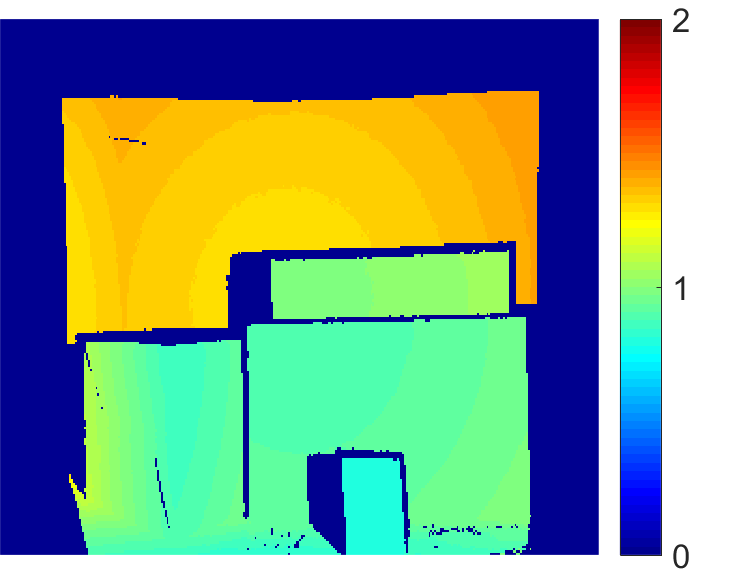

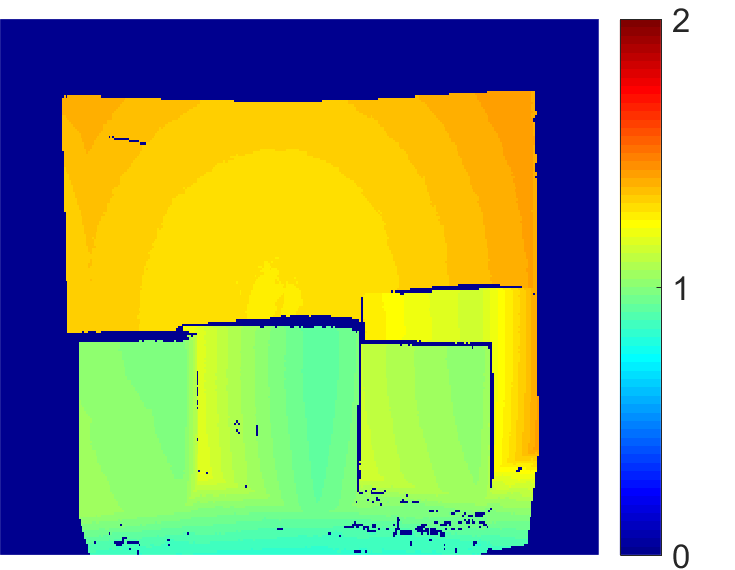

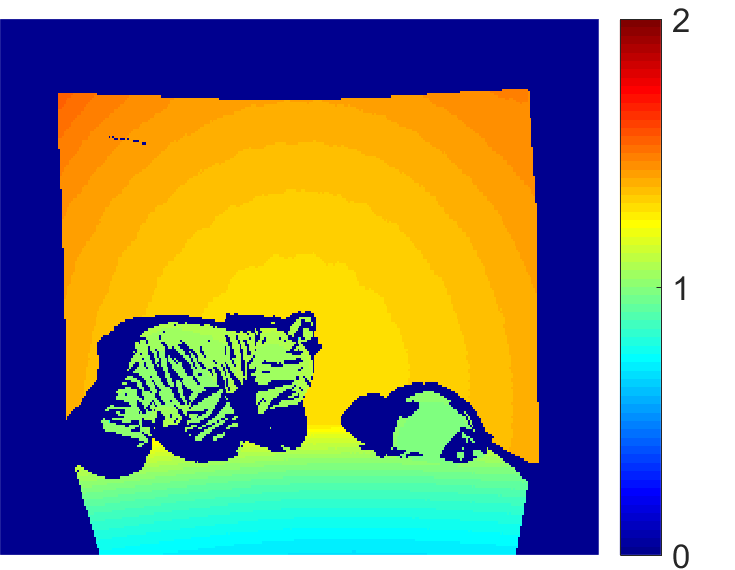

| Depth ground truth [m] |  |  |  |  | |

| Amplitude |  |  |  |  |

If you use these datasets, please cite [1] and [2].

At the address http://lttm.dei.unipd.it/nuovo/datasets.html you can find other ToF and stereo datasets from our research group.

For any information on the data, you can contact lttm@dei.unipd.it.

Contacts

For any information you can write to gianluca.agresti@sony.com.

Have a look at the website http://lttm.dei.unipd.it for other works and datasets on this topic.

References

[1] G. Agresti, H. Schaefer, P. Sartor, P. Zanuttigh, "Unsupervised Domain Adaptation for ToF Data Denoising with Adversarial Learning", International Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

[2] G. Agresti, P. Zanuttigh, "Deep Learning for Multi-Path Error Removal in ToF Sensors", Geometry Meets Deep Learning, ECCV Workshops (ECCVW), Munich, Germany, 2018.
