Paper

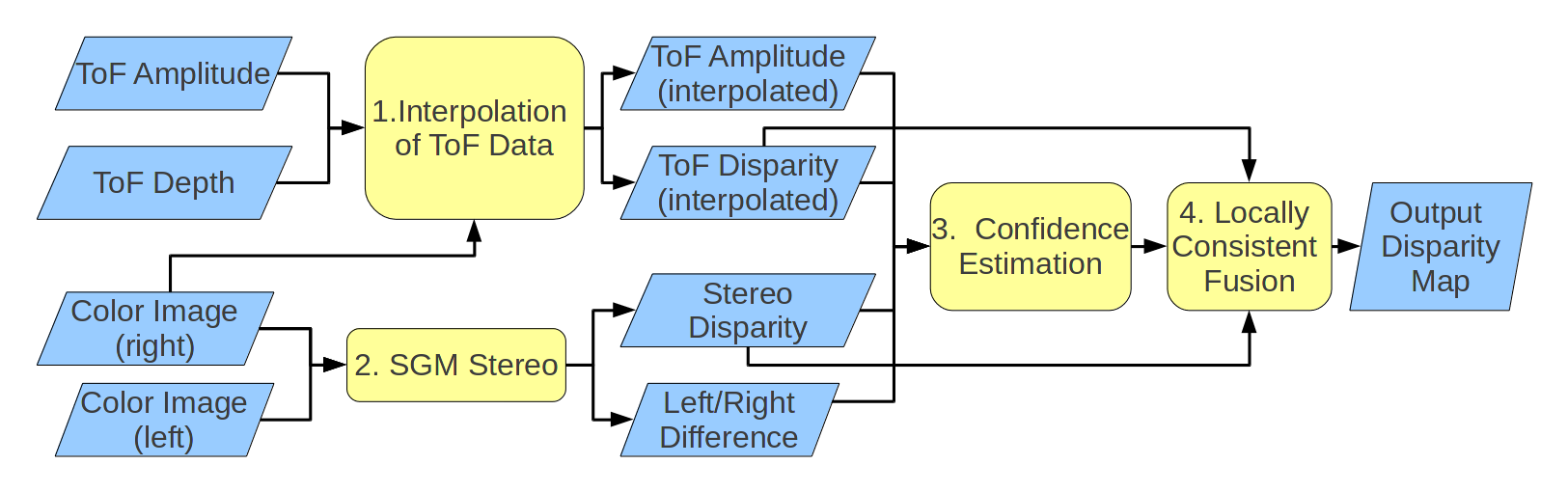

The paper proposes a novel framework for the fusion of depth data produced by a Time-of-Flight (ToF) camera and a stereo vision system. The key problem of balancing the two sources of information is solved by extracting confidence maps for both sources using deep learning. We introduce a novel synthetic dataset that accurately represents the data acquired by the proposed setup and use it to train a Convolutional Neural Network architecture. The machine learning framework estimates the reliability of both data sources at each pixel location. Finally, the two depth fields are fused by enforcing the local consistency of the depth data while taking the confidence information into account [2]. Experimental results show that the proposed approach increases the accuracy of the depth estimation.
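As a rough illustration of how per-pixel confidence can balance the two sources, the sketch below blends a ToF depth map and a stereo depth map using normalized confidence weights. It is a simplified weighted average, not the locally consistent fusion of [2] used in the paper. The function name `fuse_depth`, the toy values, and the convention that confidence lies in [0, 1] with higher values meaning more reliable are all assumptions made for this example.

```python
def fuse_depth(tof_depth, stereo_depth, tof_conf, stereo_conf, eps=1e-6):
    """Blend two depth maps pixel by pixel using confidence weights.

    All inputs are equally sized 2D lists (rows of floats).
    Assumption: confidence is in [0, 1], higher means more reliable.
    This is a plain weighted average, not the method of the paper.
    """
    fused = []
    for zt_row, zs_row, ct_row, cs_row in zip(
        tof_depth, stereo_depth, tof_conf, stereo_conf
    ):
        row = []
        for z_t, z_s, c_t, c_s in zip(zt_row, zs_row, ct_row, cs_row):
            total = c_t + c_s
            if total < eps:
                # Neither source is trusted: fall back to the plain mean.
                row.append(0.5 * (z_t + z_s))
            else:
                # Confidence-weighted average of the two depth hypotheses.
                row.append((c_t * z_t + c_s * z_s) / total)
        fused.append(row)
    return fused


# Toy 2x2 example (hypothetical values, depths in metres).
tof = [[2.0, 2.0], [3.0, 3.0]]
stereo = [[2.4, 2.0], [3.0, 5.0]]
tof_c = [[1.0, 0.5], [0.0, 0.75]]
stereo_c = [[0.0, 0.5], [0.0, 0.25]]

fused = fuse_depth(tof, stereo, tof_c, stereo_c)
print(fused)
```

Where only one source is trusted, the result follows that source. Where both are trusted, the result lies between the two depth values in proportion to their confidence.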

The full paper can be downloaded from here


Flowchart of the proposed method


Dataset

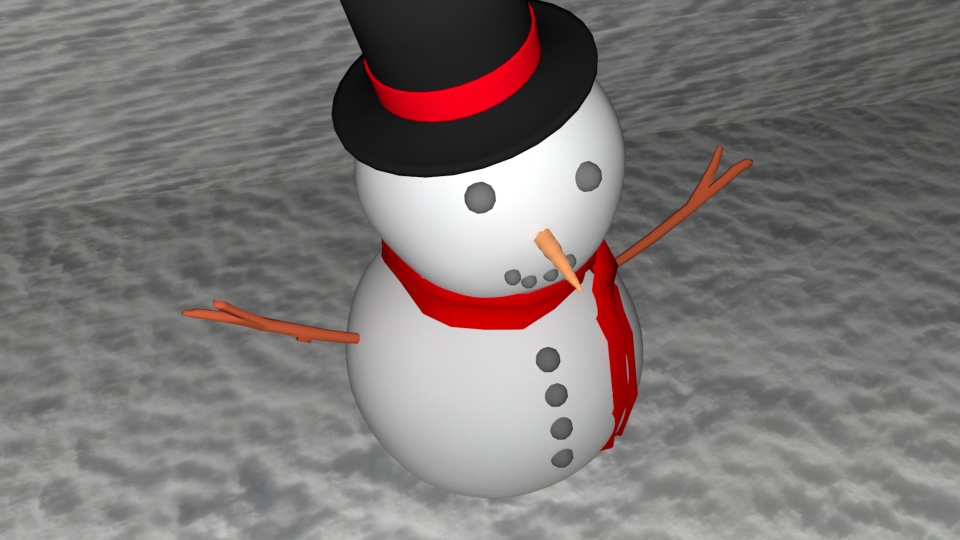

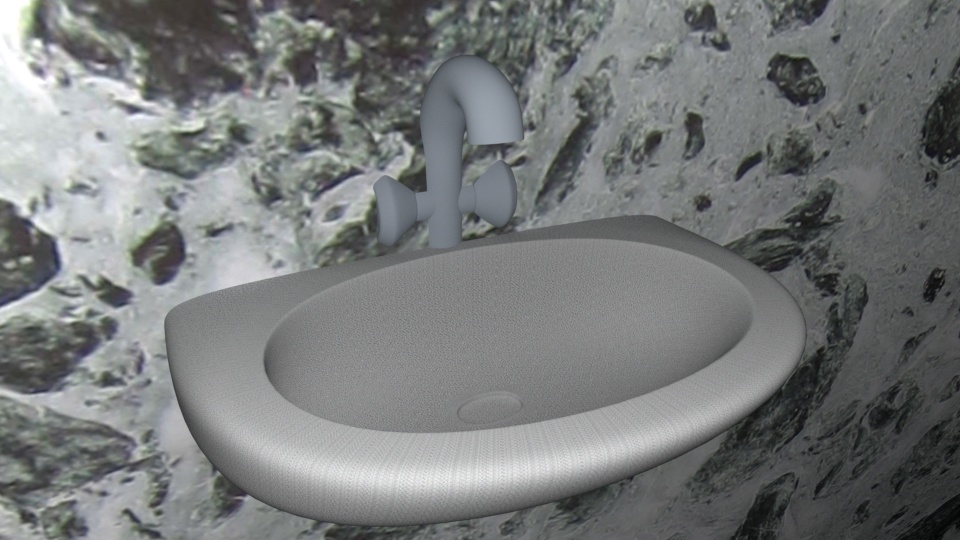

We provide a new synthetic dataset called SYNTH3, specifically developed for machine learning based Stereo-ToF fusion applications. The dataset is split into two parts, a training set and a test set. The training set contains 40 scenes obtained by rendering 20 unique scenes from different viewpoints, while the test set is composed of 15 unique scenes. The scenes contain furniture and objects of various shapes in different environments, e.g., living rooms, kitchens or offices. Some outdoor locations with non-regular structure are also included in the dataset. The scenes appear realistic and are suitable for the simulation of Stereo-ToF acquisition systems.

In each scene we have virtually placed a stereo system with characteristics resembling those of the ZED stereo camera and a ToF camera with characteristics similar to those of a Microsoft Kinect v2. For this purpose, we used a simulator developed by Sony EuTEC, building on the work of Meister et al. [3].

For each scene sample in the dataset, the following data are provided: (NEW: the dataset has been updated and extended and now also contains reprojected data and the ToF acquisitions at different frequencies)

- The 512x424 ToF depth map.

- The 960x540 ToF depth map projected on the reference camera of the stereo system.

- The 512x424 ToF amplitude images captured at 16, 80 and 120 MHz respectively.

- The 960x540 ToF amplitude image captured at 120 MHz and projected on the reference camera of the stereo system.

- The 512x424 ToF intensity images captured at 16, 80 and 120 MHz respectively.

- The ground truth depth map w.r.t. the ToF point of view.

- The 1920x1080 color image acquired by the left camera of the stereo system.

- The 1920x1080 color image acquired by the right camera of the stereo system.

- The 960x540 disparity and depth maps estimated from the color images of the stereo system w.r.t. the right camera.

- The ground truth depth and disparity maps w.r.t. the right camera of the stereo system.
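Since both disparity and depth maps are provided for the stereo system, it may help to recall how the two are related in a rectified stereo pair: depth = focal length × baseline / disparity. The sketch below applies this relation to a small disparity map. The function name `disparity_to_depth` and the focal length and baseline values are hypothetical placeholders, not the SYNTH3 calibration; the actual values should be taken from the calibration parameters distributed with the dataset. The focal length must be expressed in pixels at the resolution of the disparity map.

```python
def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) to a depth map (metres).

    Assumes a rectified stereo pair, so that depth = f * b / d.
    Pixels with non-positive disparity are mapped to infinity.
    """
    depth = []
    for row in disparity:
        depth.append(
            [focal_px * baseline_m / d if d > 0 else float("inf") for d in row]
        )
    return depth


# Hypothetical calibration values, NOT the ones shipped with SYNTH3.
FOCAL_PX = 700.0    # focal length in pixels at the disparity map resolution
BASELINE_M = 0.125  # distance between the two cameras in metres

disp = [[35.0, 70.0], [0.0, 17.5]]
depth = disparity_to_depth(disp, FOCAL_PX, BASELINE_M)
print(depth)
```

Doubling the disparity halves the depth, which is why stereo depth resolution degrades quickly for distant surfaces.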


Scene samples from the test set, color images acquired by the right camera of the stereo system

(images at a lower resolution for display purposes only)

Download

- Training set (further information about the data is available here)

- Test set (further information about the data is available here)

- Estimated confidence maps: confidence maps for both the stereo and the ToF system, computed on the test set with the proposed CNN (the darker, the more reliable)

- Calibration parameters
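Because the estimated confidence maps are rendered so that darker pixels are more reliable, the pixel values have to be inverted before they can be used as weights. The sketch below shows one way to do this, assuming the maps are stored as 8-bit grayscale images (0 = black, 255 = white). This storage format and the function name `intensity_to_confidence` are assumptions for illustration; the information pages linked from the download list should be checked for the actual encoding.

```python
def intensity_to_confidence(gray_rows, max_value=255):
    """Map grayscale intensities to confidence in [0, 1].

    Assumption: darker pixels are more reliable, so an intensity of 0
    gives confidence 1.0 and an intensity of max_value gives 0.0.
    """
    return [[(max_value - v) / max_value for v in row] for row in gray_rows]


# Toy 1x3 map: black, white, and a dark gray pixel (hypothetical values).
gray = [[0, 255, 51]]
conf = intensity_to_confidence(gray)
print(conf)
```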

If you use the dataset please cite [1].

At the address http://lttm.dei.unipd.it/nuovo/datasets.html you can find other ToF and stereo datasets from our research group.

Contacts

For any information on the data, you can write to lttm@dei.unipd.it. Have a look at our website http://lttm.dei.unipd.it for other works and datasets on this topic.

References

[1] G. Agresti, L. Minto, G. Marin, and P. Zanuttigh, "Deep Learning for Confidence Information in Stereo and ToF Data Fusion", 3D Reconstruction meets Semantics ICCV Workshop, 2017.

[2] G. Marin, P. Zanuttigh, and S. Mattoccia, "Reliable fusion of ToF and stereo depth driven by confidence measures", European Conference on Computer Vision, 2016.

[3] S. Meister, R. Nair, and D. Kondermann, "Simulation of Time-of-Flight Sensors using Global Illumination". In M. Bronstein, J. Favre, and K. Hormann, editors, Vision, Modeling and Visualization, The Eurographics Association, 2013.
