
Depth data acquired by current low-cost real-time depth cameras provide a more informative description of the hand pose than color data alone, and this description can be exploited for gesture recognition. Following this rationale, we proposed a novel hand gesture recognition scheme based on depth information. The basic framework [1,2] works as follows.

Color and

depth data are firstly used together to extract the hand and divide it into palm and

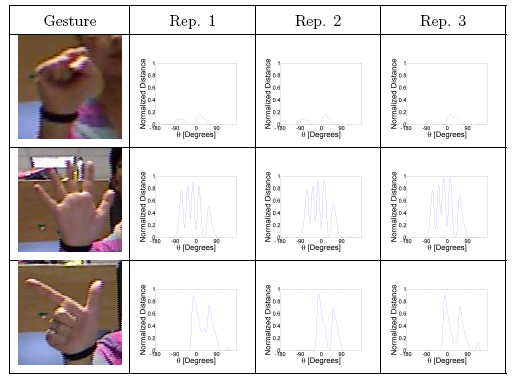

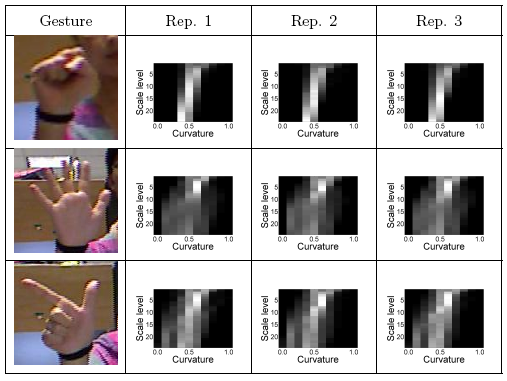

finger regions. Then different sets of feature descriptors are extracted

accounting for different clues like the distances of the �fingertips from the

hand center, the curvature of the hand contour

or the geometry of the palm region. Finally a multi-class SVM classifier is

employed to recognize the performed gestures. Experimental results demonstrate

the ability of the proposed scheme to achieve a very high accuracy on

both standard datasets and on more complex ones acquired for experimental

evaluation. The current implementation is also able to run in real-time.
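The hand extraction step can be illustrated in a simplified, depth-only form. This is a minimal sketch, not the method of [1,2]: it assumes the hand is the object closest to the camera, that the depth map is a list of rows in millimetres with 0 marking missing samples, and it keeps every sample within a hypothetical `band_mm` of the closest one. The color cue and the palm/finger split are omitted, and the mask centroid is only a rough stand-in for the hand center.

```python
from typing import List, Optional, Tuple

# Assumption: row-major depth map in millimetres; 0 means "no sample".
DepthMap = List[List[float]]
Mask = List[List[bool]]


def segment_hand(depth: DepthMap, band_mm: float = 100.0) -> Tuple[Mask, Optional[Tuple[float, float]]]:
    """Keep samples within band_mm (hypothetical threshold) of the closest valid sample.

    Returns the binary hand mask and the (row, col) centroid of the kept samples,
    or None as the centroid when the depth map contains no valid sample.
    """
    valid = [d for row in depth for d in row if d > 0]
    if not valid:
        return [[False] * len(row) for row in depth], None

    nearest = min(valid)  # assumed to lie on the hand
    mask = [[0 < d <= nearest + band_mm for d in row] for row in depth]

    # Centroid of the mask, used here only as a rough hand-center estimate.
    kept = [(r, c) for r, row in enumerate(mask) for c, inside in enumerate(row) if inside]
    cy = sum(r for r, _ in kept) / len(kept)
    cx = sum(c for _, c in kept) / len(kept)
    return mask, (cy, cx)
```

A fixed depth band is the simplest possible choice; it breaks down when the arm or another object is closer to the camera than the hand, which is one reason to combine it with color information as described above.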
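A descriptor based on the distances of the fingertips from the hand center can be sketched as follows. The exact feature of [1] is not reproduced here: this sketch assumes the finger samples have already been separated from the palm, divides the plane around the hand center into a hypothetical number of angular sectors, and stores for each sector the largest distance of a finger sample, divided by the palm radius so that the values are less sensitive to hand size and distance from the camera.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float]  # (row, col) image coordinates


def sector_distance_features(finger_pts: Iterable[Point], center: Point,
                             palm_radius: float, sectors: int = 8) -> List[float]:
    """Per angular sector: max distance of a finger sample from the center / palm radius."""
    feats = [0.0] * sectors
    cy, cx = center
    for y, x in finger_pts:
        # Angle in [0, 2*pi) around the hand center selects the sector.
        angle = math.atan2(y - cy, x - cx) % (2 * math.pi)
        k = min(int(angle / (2 * math.pi) * sectors), sectors - 1)
        dist = math.hypot(y - cy, x - cx) / palm_radius
        feats[k] = max(feats[k], dist)
    return feats
```

Sectors with no finger samples keep the value 0, so a closed fist yields an almost empty vector while extended fingers produce peaks in the corresponding directions.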
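For the curvature of the hand contour, a simple stand-in is a normalized histogram of discrete turning angles along the contour. This is a swapped-in, simpler technique and may differ from how the papers measure curvature; it assumes the contour is an ordered, closed list of (x, y) points with more than `k` points, and the neighbour offset `k` and the number of bins are hypothetical parameters.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y)


def turning_angles(contour: Sequence[Point], k: int = 2) -> List[float]:
    """Signed heading change at each point of a closed contour (assumes k < len(contour))."""
    n = len(contour)
    angles = []
    for i in range(n):
        # contour[i - k] wraps around through Python's negative indexing.
        (ax, ay), (bx, by), (cx, cy) = contour[i - k], contour[i], contour[(i + k) % n]
        heading_in = math.atan2(by - ay, bx - ax)
        heading_out = math.atan2(cy - by, cx - bx)
        # Wrap the heading change into [-pi, pi).
        angles.append((heading_out - heading_in + math.pi) % (2 * math.pi) - math.pi)
    return angles


def curvature_histogram(contour: Sequence[Point], k: int = 2, bins: int = 8) -> List[float]:
    """Histogram of turning angles, normalized to sum to 1 for a non-empty contour."""
    angles = turning_angles(contour, k)
    hist = [0.0] * bins
    for a in angles:
        idx = min(int((a + math.pi) / (2 * math.pi) * bins), bins - 1)
        hist[idx] += 1.0 / len(angles)
    return hist
```

Normalizing by the number of contour points makes histograms of hands at different distances comparable; a larger `k` smooths out pixel-level noise at the cost of blurring sharp fingertips.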
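The classification step can be illustrated with a small multi-class SVM. This is a minimal sketch, not the classifier configuration of [1,2]: it trains one linear SVM per gesture (one-vs-rest) with the Pegasos stochastic sub-gradient method and predicts the gesture whose SVM gives the highest score. The papers may use a different kernel and multi-class strategy; `lam`, `epochs` and `seed` are hypothetical defaults, and each input vector is assumed to be the concatenated descriptor values of one sample.

```python
import random
from typing import Dict, Hashable, List, Sequence

Vector = Sequence[float]


class OneVsRestLinearSVM:
    """Multi-class linear SVM: one Pegasos-trained binary SVM per class."""

    def __init__(self, lam: float = 0.1, epochs: int = 200, seed: int = 0) -> None:
        self.lam = lam        # L2 regularization strength (hypothetical default)
        self.epochs = epochs  # passes over the training set
        self.seed = seed      # fixed seed so training is reproducible
        self.weights: Dict[Hashable, List[float]] = {}

    @staticmethod
    def _augment(x: Vector) -> List[float]:
        # A constant 1.0 feature plays the role of the bias term.
        return list(x) + [1.0]

    def _pegasos(self, X: List[List[float]], y: List[float], rng: random.Random) -> List[float]:
        w = [0.0] * len(X[0])
        t = 0
        order = list(range(len(X)))
        for _ in range(self.epochs):
            rng.shuffle(order)
            for i in order:
                t += 1
                eta = 1.0 / (self.lam * t)
                margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
                # Sub-gradient step on lam/2 * ||w||^2 + hinge loss.
                w = [(1.0 - eta * self.lam) * wj for wj in w]
                if margin < 1.0:
                    w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
        return w

    def fit(self, X: Sequence[Vector], labels: Sequence[Hashable]) -> "OneVsRestLinearSVM":
        rng = random.Random(self.seed)
        Xa = [self._augment(x) for x in X]
        for c in sorted(set(labels), key=str):
            y = [1.0 if lab == c else -1.0 for lab in labels]
            self.weights[c] = self._pegasos(Xa, y, rng)
        return self

    def predict(self, x: Vector) -> Hashable:
        xa = self._augment(x)
        # Pick the class whose binary SVM gives the largest decision value.
        return max(self.weights, key=lambda c: sum(w * v for w, v in zip(self.weights[c], xa)))
```

In practice a mature SVM library such as LIBSVM, usually with a non-linear kernel, would be preferred; the sketch only shows how a set of binary SVMs yields a multi-class decision.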

We have also proposed several other solutions, including variations of the original approach, novel schemes, and schemes based on different sensors:

- In [3] an improved version of the palm detection scheme is proposed.
- Different machine learning schemes are proposed in [4] to improve the recognition accuracy.
- Color-based descriptors are combined with the depth-based ones in [5].
- A review of several feature extraction schemes is presented in [6].
- The use of a Leap Motion sensor together with a depth sensor is discussed in [7,8].
- In a different approach [9], the curvature of the hand shape is used to separate the palm from the fingers. Density-based clustering is then used together with a linear programming approach to separate the individual fingers.
- The approach of [10], targeted at real-time ego-vision systems, exploits descriptors based on the hand silhouette, in particular the curvature of the contour and features based on the distance transform. Synthetic data generated with an ad-hoc library, made available on this website, were used for training. The approach has been integrated into an augmented reality system with a head-mounted display, presented in [11].
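The finger-separation step of [9] relies on density-based clustering. As an illustration only, the following sketch implements plain DBSCAN on 2D points, assuming the finger samples have already been isolated from the palm; the linear programming stage of [9] is not shown, DBSCAN is used as a representative density-based method that may differ from the variant in the paper, and `eps` and `min_pts` are hypothetical parameters whose values would depend on the sensor resolution and the hand distance.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
NOISE = -1


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""
    labels: List[Optional[int]] = [None] * len(points)  # None = not yet visited

    def neighbours(i: int) -> List[int]:
        # Brute-force eps-neighbourhood, including the point itself.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster, is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_seeds = neighbours(j)
            if len(j_seeds) >= min_pts:  # core point: keep growing the cluster
                queue.extend(j_seeds)
    return [lab if lab is not None else NOISE for lab in labels]
```

Each resulting cluster would correspond to one candidate finger; the brute-force neighbourhood search is quadratic in the number of points, which is acceptable for the few hundred samples of a segmented hand but would call for a spatial index on larger inputs.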
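The distance-transform features mentioned for [10] start from the distance of each silhouette pixel to the background. The sketch below computes an exact city-block (L1) distance transform with the classic two-pass algorithm and, as one possible derived quantity, takes its maximum as a rough palm-center estimate. The Euclidean transform and the specific features used in [10] may differ, and `palm_center_estimate` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple


def distance_transform_l1(mask: Sequence[Sequence[bool]]) -> List[List[int]]:
    """City-block distance from each foreground pixel to the nearest background pixel.

    Background pixels get 0. If the mask has no background pixel at all,
    foreground values stay at the sentinel rows + cols.
    """
    rows, cols = len(mask), len(mask[0])
    far = rows + cols  # larger than any L1 distance inside the image
    d = [[far if mask[r][c] else 0 for c in range(cols)] for r in range(rows)]
    # Forward pass: propagate distances from the top and left neighbours.
    for r in range(rows):
        for c in range(cols):
            if d[r][c]:
                if r > 0:
                    d[r][c] = min(d[r][c], d[r - 1][c] + 1)
                if c > 0:
                    d[r][c] = min(d[r][c], d[r][c - 1] + 1)
    # Backward pass: propagate distances from the bottom and right neighbours.
    for r in range(rows - 1, -1, -1):
        for c in range(cols - 1, -1, -1):
            if d[r][c]:
                if r < rows - 1:
                    d[r][c] = min(d[r][c], d[r + 1][c] + 1)
                if c < cols - 1:
                    d[r][c] = min(d[r][c], d[r][c + 1] + 1)
    return d


def palm_center_estimate(mask: Sequence[Sequence[bool]]) -> Tuple[int, int]:
    """Hypothetical helper: pixel deepest inside the silhouette (first one on ties)."""
    d = distance_transform_l1(mask)
    return max(((r, c) for r in range(len(d)) for c in range(len(d[0]))),
               key=lambda rc: d[rc[0]][rc[1]])
```

Because the palm is the widest part of the silhouette, its pixels tend to have the largest distance values, while thin fingers stay close to zero; statistics of these values are one way to build silhouette features.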

Related Papers:

[1] F. Dominio, M. Donadeo, P. Zanuttigh, "Combining multiple depth-based descriptors for hand gesture recognition," Pattern Recognition Letters, vol. 50, pp. 101-111, 2014. [Dataset Page]

[2] F. Dominio, M. Donadeo, G. Marin, P. Zanuttigh, G.M. Cortelazzo, "Hand Gesture Recognition with Depth Data," ACM Multimedia Artemis Workshop, Barcelona, Spain, October 2013. [Dataset Page] [Presentation]

[3] G. Marin, M. Fraccaro, M. Donadeo, F. Dominio, P. Zanuttigh, "Palm area detection for reliable hand gesture recognition," Proceedings of MMSP 2013, Pula, Italy, October 2013.

[4] L. Nanni, A. Lumini, F. Dominio, M. Donadeo, P. Zanuttigh, "Ensemble to improve gesture recognition," to appear in International Journal of Automated Identification Technology.

[5] L. Nanni, A. Lumini, F. Dominio, M. Donadeo, P. Zanuttigh, "Improved Feature Extraction and Ensemble Learning for Gesture Recognition," to appear in Advances in Machine Learning Research, Nova Science Publishers, 2014.

[6] F. Dominio, G. Marin, M. Piazza, P. Zanuttigh, "Feature Descriptors for Depth-Based Hand Gesture Recognition," in "Computer Vision and Machine Learning with RGB-D Sensors," pp. 215-23, Springer International Publishing, 2014.

[7] G. Marin, F. Dominio, P. Zanuttigh, "Hand gesture recognition with Leap Motion and Kinect devices," IEEE International Conference on Image Processing (ICIP), pp. 1565-1569, Paris, France, October 2014. [Dataset Page]

[8] G. Marin, F. Dominio, P. Zanuttigh, "Hand Gesture Recognition with Jointly Calibrated Leap Motion and Depth Sensor," Multimedia Tools and Applications, 2015. [Dataset Page]

[9] L. Minto, G. Marin, P. Zanuttigh, "3D Hand Shape Analysis for Palm and Fingers Identification," International Workshop on Understanding Human Activities through 3D Sensors (FG 2015 Workshop), Ljubljana, Slovenia, May 2015.

[10] A. Memo, L. Minto, P. Zanuttigh, "Exploiting Silhouette Descriptors and Synthetic Data for Hand Gesture Recognition," Smart Tools and Apps in Computer Graphics, Verona, Italy, October 15-16, 2015. [Dataset Page] [Synthetic rendering library]

[11] A. Memo, P. Zanuttigh, "Head-mounted gesture controlled interface for human-computer interaction," Multimedia Tools and Applications, 2017. [Dataset Page] [Synthetic rendering library]

For all the publications: the copyright belongs to the corresponding publishers. Personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes, for creating new collective works for resale or redistribution to servers or lists, or to reuse any copyrighted component of this work in other works must be obtained from the publisher.
