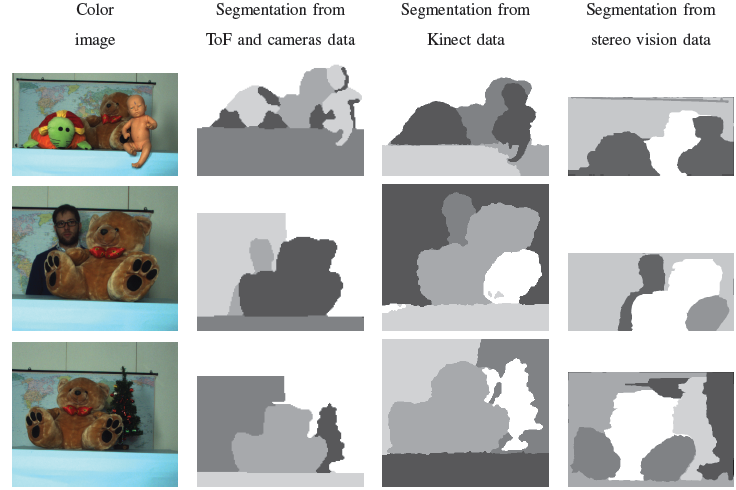

Scene segmentation is a well-known problem in computer vision traditionally tackled by exploiting only the color information from a single scene view. Recent hardware and software developments, like Microsoft's Kinect or new stereo vision algorithms, allow to estimate in real-time scene geometry and open the way for new scene segmentation approaches based on the fusion of both color and depth data. We proposed a novel segmentation scheme where multi-dimensional vectors are used to jointly represent color and depth data and various clustering techniques are applied to them in order to segment the scene. Furthermore we addressed the critical issue of how to balance the two sources of information by using an automatic procedure based on an unsupervised metric for the segmentation quality. Different acquisition setups, like Time-of-Flight cameras, the Microsoft Kinect device and stereo vision systems have been used inside this framework. Experimental results show how the proposed algorithm outperforms scene segmentation algorithms based on geometry or color data alone and also other approaches that exploit both clues. We also proposed an improved segmentation scheme that exploits also a 3D surface estimation scheme [4,5,6,7]. Firstly the scene is segmented in two parts using the previously described approach. Then a NURBS model is fitted on each of the computed segments. Various metrics evaluating the accuracy of the fitting are used as a measure of the plausibility that the segment represents a single surface or object. Segments that do not represent a single surface are split again into smaller regions and the process is iterated in a tree-structure until the optimal segmentation is obtained. In [6] a region merging scheme starting from an over-segmentation is exploited, while in [7] the approach is combined with a deep learning framework. In [8] the approach of [7] has been extended with a more advanced deep learning architecture. Furthermore in this work we addressed not

only the segmentation but also the semantic labeling task. Since a standard dataset is not available for the evaluation of

approaches exploiting both color and depth data, the datasets used for

the experimental validation have been made available here in

order to allow other researchers to compare and evaluate joint

segmentation approaches. In [10] a novel domain adaptation technique is developed to perform semantic segmentation of real driving scenarios starting from an initial training performed on synthetic datasets. [1] C. Dal. Mutto, P. Zanuttigh,

G.M. Cortelazzo, [2] C. Dal Mutto; S. Mattoccia, P. Zanuttigh, G.M.

Cortelazzo, [3] C. Dal Mutto, F. Dominio, P. Zanuttigh, S.

Mattoccia, [4] G.Pagnutti, P. Zanuttigh, [5] G.Pagnutti, P. Zanuttigh,

[6] G.Pagnutti, P. Zanuttigh,

[7] L. Minto, G.Pagnutti, P. Zanuttigh, [8] G.Pagnutti, L. Minto, P. Zanuttigh, [9] U.Michieli, M.Camporese, A.Agiollo, G.Pagnutti, P. Zanuttigh, [10] M.Biasetton, U. Michieli, G. Agresti, P. Zanuttigh, |